Classification with Imbalanced Datasets

This Website contains SCI2S research material on Classification with Imbalanced Datasets. This research is related to the following SCI2S work published recently:

V. López, A. Fernandez, S. Garcia, V. Palade and F. Herrera, An Insight into Classification with Imbalanced Data: Empirical Results and Current Trends on Using Data Intrinsic Characteristics. Information Sciences 250 (2013) 113-141 doi: 10.1016/j.ins.2013.07.007 ![]()

The web is organized according to the following summary:

- Introduction to Classification with Imbalanced Datasets

- Imbalanced Datasets in Classification

- Problems related with intrinsic data characteristics

- Addressing Classification with imbalanced data: preprocessing, cost-sensitive learning and ensemble techniques

- Software, Algorithm Implementations and Dataset Repository

- Literature review

Introduction to Classification with Imbalanced Datasets

In many supervised learning applications, there is a significant difference between the prior probabilities of different classes, i.e, between the probabilities with which an example belongs to the different classes of the classification problem. This situation is known as the class imbalance problem (N.V. Chawla, N. Japkowicz, A. Kotcz, Editorial: special issue on learning from imbalanced data sets, SIGKDD Explorations 6 (1) (2004) 1–6. doi: 10.1145/1007730.1007733, H. He, E.A. Garcia, Learning from imbalanced data, IEEE Transactions on Knowledge and Data Engineering 21 (9) (2009) 1263–1284. doi: 10.1109/TKDE.2008.239, Y. Sun, A.K.C. Wong, M.S. Kamel, Classification of imbalanced data: a review, International Journal of Pattern Recognition and Artificial Intelligence 23 (4) (2009) 687–719. doi: 10.1142/S0218001409007326) and it is common in many real problems from telecommunications, web, finance-world, ecology, biology, medicine not only, and which can be considered one of the top problems in data mining today (Q. Yang, X. Wu, 10 challenging problems in data mining research, International Journal of Information Technology and Decision Making 5 (4) (2006) 597–604. doi: 10.1142/S0219622006002258). Furthermore, it is worth to point out that the minority class is usually the one that has the highest interest from a learning point of view and it also implies a great cost when it is not well classified (C. Elkan, The foundations of cost–sensitive learning, in: Proceedings of the 17th IEEE International Joint Conference on Artificial Intelligence (IJCAI’01), 2001, pp. 973–978).

The hitch with imbalanced datasets is that standard classification learning algorithms are often biased towards the majority class (known as the "negative" class) and therefore there is a higher misclassification rate for the minority class instances (called the "positive" examples). Therefore, throughout the last years, many solutions have been proposed to deal with this problem, both for standard learning algorithms and for ensemble techniques (M. Galar, A. Fernández, E. Barrenechea, H. Bustince, F. Herrera, A review on ensembles for class imbalance problem: bagging, boosting and hybrid based approaches, IEEE Transactions on Systems, Man, and Cybernetics – part C: Applications and Reviews 42 (4) (2012) 463–484. doi: 10.1109/TSMCC.2011.2161285). They can be categorized into three major groups:

- Data sampling: In which the training instances are modified in such a way to produce a more or less balanced class distribution that allow classifiers to perform in a similar manner to standard classification (G.E.A.P.A. Batista, R.C. Prati, M.C. Monard, A study of the behaviour of several methods for balancing machine learning training data, SIGKDD Explorations 6 (1) (2004) 20–29. doi: 10.1145/1007730.1007735, N.V. Chawla, K.W. Bowyer, L.O. Hall, W.P. Kegelmeyer, SMOTE: synthetic minority over-sampling technique, Journal of Artificial Intelligent Research 16 (2002) 321–357. doi: 10.1613/jair.953).

- Algorithmic modification: This procedure is oriented towards the adaptation of base learning methods to be more attuned to class imbalance issues (B. Zadrozny, C. Elkan, Learning and making decisions when costs and probabilities are both unknown, in: Proceedings of the 7th International Conference on Knowledge Discovery and Data Mining (KDD’01), 2001, pp. 204–213.).

- Cost-sensitive learning: This type of solutions incorporate approaches at the data level, at the algorithmic level, or at both levels combined, considering higher costs for the misclassification of examples of the positive class with respect to the negative class, and therefore, trying to minimize higher cost errors (P. Domingos, Metacost: a general method for making classifiers cost–sensitive, in: Proceedings of the 5th International Conference on Knowledge Discovery and Data Mining (KDD’99), 1999, pp. 155–164., B. Zadrozny, J. Langford, N. Abe, Cost–sensitive learning by cost–proportionate example weighting, in: Proceedings of the 3rd IEEE International Conference on Data Mining (ICDM’03), 2003, pp. 435–442.).

Most of the studies on the behavior of several standard classifiers in imbalance domains have shown that significant loss of performance is mainly due to the skewed class distribution, given by the imbalance ratio (IR), defined as the ratio of the number of instances in the majority class to the number of examples in the minority class (V. García, J.S. Sánchez, R.A. Mollineda, On the effectiveness of preprocessing methods when dealing with different levels of class imbalance, Knowledge Based Systems 25 (1) (2012) 13–21. doi: 10.1016/j.knosys.2011.06.013, A. Orriols-Puig, E. Bernadó-Mansilla, Evolutionary rule-based systems for imbalanced datasets, Soft Computing 13 (3) (2009) 213–225. doi: 10.1007/s00500-008-0319-7). However, there are several investigations which also suggest that there are other factors that contribute to such performance degradation (N. Japkowicz, S. Stephen, The class imbalance problem: a systematic study, Intelligent Data Analysis Journal 6 (5) (2002) 429–450.). Therefore, as a second goal, we present a discussion about six significant problems related to data intrinsic characteristics and that must be taken into account in order to provide better solutions for correctly identifying both classes of the problem:

- The identification of areas with small disjuncts (G.M. Weiss, Mining with rare cases, in: O. Maimon, L. Rokach (Eds.), The Data Mining and Knowledge Discovery Handbook, Springer, 2005, pp. 765–776., G.M. Weiss, The impact of small disjuncts on classifier learning, in: R. Stahlbock, S.F. Crone, S. Lessmann (Eds.), Data Mining: Annals of Information Systems, vol. 8, Springer, 2010, pp. 193–226.).

- The lack of density and information in the training data (M. Wasikowski, X.-W. Chen, Combating the small sample class imbalance problem using feature selection, IEEE Transactions on Knowledge and Data Engineering 22 (10) (2010) 1388–1400. doi: 10.1109/TKDE.2009.187).

- The problem of overlapping between the classes (M. Denil, T. Trappenberg, Overlap versus imbalance, in: Proceedings of the 23rd Canadian Conference on Advances in Artificial Intelligence (CCAI’10), Lecture Notes on Artificial Intelligence, vol. 6085, 2010, pp. 220–231., V. García, R.A. Mollineda, J.S. Sánchez, On the k-NN performance in a challenging scenario of imbalance and overlapping, Pattern Analysis Applications 11 (3–4) (2008) 269–280. doi: 10.1007/s10044-007-0087-5).

- The impact of noisy data in imbalanced domains (C.E. Brodley, M.A. Friedl, Identifying mislabeled training data, Journal of Artificial Intelligence Research 11 (1999) 131–167. doi: 10.1613/jair.606, C. Seiffert, T.M. Khoshgoftaar, J. Van Hulse, A. Folleco, An empirical study of the classification performance of learners on imbalanced and noisy software quality data, Information Sciences (2013), In press. doi: 10.1016/j.ins.2010.12.016).

- The significance of the borderline instances to carry out a good discrimination between the positive and negative classes, and its relationship with noisy examples (D.J. Drown, T.M. Khoshgoftaar, N. Seliya, Evolutionary sampling and software quality modeling of high-assurance systems, IEEE Transactions on Systems, Man, and Cybernetics, Part A 39 (5) (2009) 1097–1107. doi: 10.1109/TSMCA.2009.2020804, K. Napierala, J. Stefanowski, S. Wilk, Learning from imbalanced data in presence of noisy and borderline examples, in: Proceedings of the 7th International Conference on Rough Sets and Current Trends in Computing (RSCTC’10), Lecture Notes on Artificial Intelligence, vol. 6086, 2010, pp. 158–167.).

- The possible differences in the data distribution for the training and test data, also known as the dataset shift (J.G. Moreno-Torres, X. Llorà, D.E. Goldberg, R. Bhargava, Repairing fractures between data using genetic programming-based feature extraction: a case study in cancer diagnosis, Information Sciences 222 (2013) 805–823. doi: 10.1016/j.ins.2010.09.018, H. Shimodaira, Improving predictive inference under covariate shift by weighting the log-likelihood function, Journal of Statistical Planning and Inference 90 (2) (2000) 227–244. doi: 10.1016/S0378-3758(00)00115-4).

This thorough study of the problem can guide us about the source where the difficulties for imbalanced classification emerge, focusing on the analysis of significant data intrinsic characteristics. Specifically, for each established scenario we show an experimental example on how it affects the behavior of the learning algorithms, in order to stress its significance.

We must point out that some of these topics have recent studies associated, examining their main contributions and recommendations. However, we emphasize that they still need to be addressed in more detail in order to have models of high quality in this classification scenario and, therefore, we have stressed them as future trends of research for imbalanced learning. Overcoming these problems can be the key for developing new approaches that improve the correct identification of both the minority and majority classes.

Imbalanced Datasets in Classification

The problem of imbalanced datasets

In the classification problem field, the scenario of imbalanced datasets appears frequently. The main property of this type of classification problem is that the examples of one class significantly outnumber the examples of the other one (H. He, E.A. Garcia, Learning from imbalanced data, IEEE Transactions on Knowledge and Data Engineering 21 (9) (2009) 1263–1284. doi: 10.1109/TKDE.2008.239, Y. Sun, A.K.C. Wong, M.S. Kamel, Classification of imbalanced data: a review, International Journal of Pattern Recognition and Artificial Intelligence 23 (4) (2009) 687–719. doi: 10.1142/S0218001409007326). The minority class usually represents the most important concept to be learned, and it is difficult to identify it since it might be associated with exceptional and significant cases (G.M. Weiss, Mining with rarity: a unifying framework, SIGKDD Explorations 6 (1) (2004) 7–19. doi: 10.1145/1007730.1007734), or because the data acquisition of these examples is costly (G.M. Weiss, Y. Tian, Maximizing classifier utility when there are data acquisition and modeling costs, Data Mining and Knowledge Discovery 17 (2) (2008) 253–282. doi: 10.1007/s10618-007-0082-x). In most cases, the imbalanced class problem is associated to binary classification, but the multi-class problem often occurs and, since there can be several minority classes, it is more difficult to solve (A. Fernández, V. López, M. Galar, M.J. del Jesus, F. Herrera, Analysing the classification of imbalanced data-sets with multiple classes: binarization techniques and ad-hoc approaches, Knowledge-Based Systems 42 (2013) 97–110. doi: 10.1016/j.knosys.2013.01.018, M. Lin, K. Tang, X. Yao, Dynamic sampling approach to training neural networks for multiclass imbalance classification, IEEE Transactions on Neural Networks and Learning Systems 24 (4) (2013) 647–660. doi: 10.1109/TNNLS.2012.2228231).

Since most of the standard learning algorithms consider a balanced training set, this may generate suboptimal classification models, i.e. a good coverage of the majority examples, whereas the minority ones are misclassified frequently. Therefore, those algorithms, which obtain a good behavior in the framework of standard classification, do not necessarily achieve the best performance for imbalanced datasets (A. Fernandez, S. García, J. Luengo, E. Bernadó-Mansilla, F. Herrera, Genetics-based machine learning for rule induction: state of the art, taxonomy and comparative study, IEEE Transactions on Evolutionary Computation 14 (6) (2010) 913–941. doi: 10.1109/TEVC.2009.2039140). There are several reasons behind this behavior:

- The use of global performance measures for guiding the learning process, such as the standard accuracy rate, may provide an advantage to the majority class.

- Classification rules that predict the positive class are often highly specialized and thus their coverage is very low, hence they are discarded in favor of more general rules, i.e. those that predict the negative class.

- Very small clusters of minority class examples can be identified as noise, and therefore they could be wrongly discarded by the classifier. On the contrary, few real noisy examples can degrade the identification of the minority class, since it has fewer examples to train with.

In recent years, the imbalanced learning problem has received much attention from the machine learning community. Regarding real world domains, the importance of the imbalance learning problem is growing, since it is a recurring issue in many applications. As some examples, we could mention very high resolution airbourne imagery (X. Chen, T. Fang, H. Huo, D. Li, Graph-based feature selection for object-oriented classification in VHR airborne imagery, IEEE Transactions on Geoscience and Remote Sensing 49 (1) (2011) 353–365. doi: 10.1109/TGRS.2010.2054832), forecasting of ozone levels (C.-H. Tsai, L.-C. Chang, H.-C. Chiang, Forecasting of ozone episode days by cost-sensitive neural network methods, Science of the Total Environment 407 (6) (2009) 2124–2135. doi: 10.1016/j.scitotenv.2008.12.007), face recognition (N. Kwak, Feature extraction for classification problems and its application to face recognition, Pattern Recognition 41 (5) (2008) 1718–1734. doi: 10.1016/j.patcog.2007.10.015), and especially medical diagnosis (R. Batuwita, V. Palade, microPred: effective classification of pre-miRNAs for human miRNA gene prediction, Bioinformatics 25 (8) (2009) 989–995. doi: 10.1093/bioinformatics/btp107, H.-Y. Lo, C.-M. Chang, T.-H. Chiang, C.-Y. Hsiao, A. Huang, T.-T. Kuo, W.-C. Lai, M.-H. Yang, J.-J. Yeh, C.-C. Yen, S.-D. Lin, Learning to improve area-under-FROC for imbalanced medical data classification using an ensemble method, SIGKDD Explorations 10 (2) (2008) 43–46. doi: 10.1145/1540276.1540290, M.A. Mazurowski, P.A. Habas, J.M. Zurada, J.Y. Lo, J.A. Baker, G.D. Tourassi, Training neural network classifiers for medical decision making: the effects of imbalanced datasets on classification performance, Neural Networks 21 (2–3) (2008). doi: 10.1016/j.neunet.2007.12.031, L. Mena, J.A. González, Symbolic one-class learning from imbalanced datasets: application in medical diagnosis, International Journal on Artificial Intelligence Tools 18 (2) (2009) 273–309. doi: 10.1142/S0218213009000135, Z. Wang, V. Palade, Building interpretable fuzzy models for high dimensional data analysis in cancer diagnosis, BMC Genomics 12 ((S2):S5) (2011). doi: 10.1186/1471-2164-12-S2-S5). It is important to remember that the minority class usually represents the concept of interest and it is the most difficult to obtain from real data, for example patients with illnesses in a medical diagnosis problem; whereas the other class represents the counterpart of that concept (healthy patients).

Evaluation in imbalanced domains

The evaluation criteria is a key factor in assessing the classification performance and guiding the classifier modeling. In a two-class problem, the confusion matrix (shown in Table 1) records the results of correctly and incorrectly recognized examples of each class.

| Positive prediction | Negative prediction | |

| Positive class | True Positive (TP) | False Negative (FN) |

| Negative class | False Positive (FP) | True Negative (TN) |

Traditionally, the accuracy rate (Equation 1) has been the most commonly used empirical measure. However, in the framework of imbalanced datasets, accuracy is no longer a proper measure, since it does not distinguish between the number of correctly classified examples of different classes. Hence, it may lead to erroneous conclusions, i.e., a classifier achieving an accuracy of 90% in a dataset with an IR value of 9 is not accurate if it classifies all examples as negatives.

$$Acc=\frac{TP+TN}{TP+FN+FP+TN} \ (Equation \ 1)$$

In imbalanced domains, the evaluation of the classifiers' performance must be carried out using specific metrics in order to take into account the class distribution. Concretely, we can obtain four metrics from Table 1 to measure the classification performance of both, positive and negative, classes independently:

- True positive rate: $TP_{rate}=\frac{TP}{TP+FN}$ is the percentage of positive instances correctly classified.

- True negative rate: $TN_{rate}=\frac{TN}{FP+TN}$ is the percentage of negative instances correctly classified.

- False positive rate: $FP_{rate}=\frac{FP}{FP+TN}$ is the percentage of negative instances misclassified.

- False negative rate: $FN_{rate}=\frac{FN}{TP+FN}$ is the percentage of positive instances misclassified.

Since in this classification scenario we intend to achieve good quality results for both classes, there is a necessity of combining the individual measures of both the positive and negative classes, as none of these measures alone is adequate by itself.

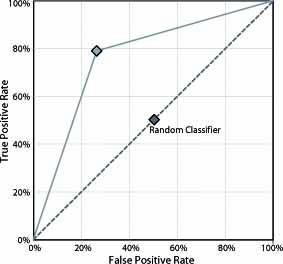

A well-known approach to unify these measures and to produce an evaluation criteria is to use the Receiver Operating Characteristic (ROC) graphic (A.P. Bradley, The use of the area under the roc curve in the evaluation of machine learning algorithms, Pattern Recognition 30 (7) (1997) 1145–1159. doi: 10.1016/S0031-3203(96)00142-2). This graphic allows the visualization of the trade-off between the benefits (TPrate) and costs (FPrate), as it evidences that any classifier cannot increase the number of true positives without also increasing the false positives. The Area Under the ROC Curve (AUC) (J. Huang, C.X. Ling, Using AUC and accuracy in evaluating learning algorithms, IEEE Transactions on Knowledge and Data Engineering 17 (3) (2005) 299–310. doi: 10.1109/TKDE.2005.50) corresponds to the probability of correctly identifying which one of the two stimuli is noise and which one is signal plus noise. The AUC provides a single measure of a classifier's performance for evaluating which model is better on average. Figure 1 shows how to build the ROC space plotting on a two-dimensional chart the TPrate (Y-axis) against the FPrate (X-axis). Points in (0,0) and (1,1) are trivial classifiers where the predicted class is always the negative and positive one, respectively. On the contrary, (0,1) point represents the perfect classifier. The AUC measure is computed just by obtaining the area of the graphic:

$$AUC=\frac{1+TP_{rate}-FP_{rate}}{2} \ (Equation \ 2)$$

Figure 1. Example of a ROC plot. Two classifiers' curves are depicted: the dashed line represents a random classifier, whereas the solid line is a classifier which is better than the random classifier

In R.C. Prati, G.E.A.P.A. Batista, M.C. Monard, A survey on graphical methods for classification predictive performance evaluation, IEEE Transactions on Knowledge and Data Engineering 23 (11) (2011) 1601–1618. doi: 10.1109/TKDE.2011.59, the significance of these graphical methods for the classification predictive performance evaluation is stressed. According to the authors, the main advantage of this type of methods resides in their ability to depict the trade-offs between evaluation aspects in a multidimensional space rather than reducing these aspects to an arbitrarily chosen (and often biased) single scalar measure. In particular, they present a review of several representation mechanisms emphasizing the best scenario for their use; for example, in imbalanced domains, when we are interested in the positive class, it is recommended the use of precision-recall graphs (J. Davis, M. Goadrich, The relationship between precisionrecall and ROC curves, in: Proceedings of the 23th International Conference on Machine Learning (ICML’06), ACM, 2006, pp. 233–240.). Furthermore, the expected cost or profit of each model might be analyzed using cost curves (C. Drummond, R.C. Holte, Cost curves: an improved method for visualizing classifier performance, Machine Learning 65 (1) (2006) 95–130. doi: 10.1007/s10994-006-8199-5), lift and ROI graphs (H.-Y. Lo, C.-M. Chang, T.-H. Chiang, C.-Y. Hsiao, A. Huang, T.-T. Kuo, W.-C. Lai, M.-H. Yang, J.-J. Yeh, C.-C. Yen, S.-D. Lin, Learning to improve area-under-FROC for imbalanced medical data classification using an ensemble method, SIGKDD Explorations 10 (2) (2008) 43–46. doi: 10.1145/1540276.1540290).

Other metric of interest to be stressed in this area is the geometric mean of the true rates (R. Barandela, J.S. Sánchez, V. García, E. Rangel, Strategies for learning in class imbalance problems, Pattern Recognition 36 (3) (2003) 849–851. doi: 10.1016/S0031-3203(02)00257-1), which can be defined as:

$$GM=\sqrt{\frac{TP}{TP+FN} · \frac{TN}{FP+TN}} \ (eQUATION 3)$$

This metric attempts to maximize the accuracy on each of the two classes with a good balance, being a performance metric that correlates both objectives. However, due to this symmetric nature of the distribution of the geometric mean over TPrate (sensitivity) and the TNrate (specificity), it is hard to contrast different models according to their precision on each class.

Another significant performance metric that is commonly used is the F-measure (R. Baeza-Yates, B. Ribeiro-Neto, Modern Information Retrieval, Addison Wesley, 1999):

$$F_m=\frac{(1+ \beta ^2)(PPV·TP_{rate})}{\beta ^2 PPV+TP_{rate}} \ (Equation \ 4)$$

$$PPV=\frac{TP}{TP+FP}$$

A popular choice for β is 1, where equal importance is assigned for both TPrate and the positive predictive value (PPV). This measure would be more sensitive to the changes in the PPV than to the changes in TPrate, which can lead to the selection of sub-optimal models.

According to the previous comments, some authors try to propose several measures for imbalanced domains in order to be able to obtain as much information as possible about the contribution of each class to the final performance and to take into account the IR of the dataset as an indication of its difficulty. For example, in R. Batuwita, V. Palade, AGm: a new performance measure for class imbalance learning. application to bioinformatics problems, in: Proceedings of the 8th International Conference on Machine Learning and Applications (ICMLA 2009), 2009, pp. 545–550. and R. Batuwita, V. Palade, Adjusted geometric-mean: a novel performance measure for imbalanced bioinformatics datasets learning, Journal of Bioinformatics and Computational Biology 10 (4) (2012). doi: 10.1142/S0219720012500035, the Adjusted G-mean is proposed. This measure is designed towards obtaining the highest sensitivity (TPrate) without decreasing too much the specificity (TNrate). This fact is measured with respect to the original model, i.e. the original classifier without addressing the class imbalance problem. Equation 5 shows its definition:

$$AGM=\frac{GM+TN_{rate}·(FP+TN)}{1+FP+TN}; \ If TP_{rate} > 0$$

$$AGM = 0: \ If TP_{rate}=0 \ (Equation \ 5)$$

Additionally, in V. García, R.A. Mollineda, J.S. Sánchez, A new performance evaluation method for two-class imbalanced problems, in: Proceedings of the Structural and Syntactic Pattern Recognition (SSPR’08) and Statistical Techniques in Pattern Recognition (SPR’08), Lecture Notes on Computer Science, vol. 5342, 2008, pp. 917–925. the authors presented a simple performance metric, called Dominance, which is aimed to point out the dominance or prevalence relationship between the positive class and the negative class, in the range [-1,+1] (Equation 6). Furthermore, it can be used as a visual tool to analyze the behavior of a classifier on a 2-D space from the joint perspective of global precision (Y-axis) and dominance (X-axis).

$$Dom=TP_{rate}-TN_{rate} \ (Equation \ 6)$$

The same authors, using the previous concept of dominance, define a new metric called Index of Balanced Accuracy (IBA) (V. García, R.A. Mollineda, J.S. Sánchez, Theoretical analysis of a performance measure for imbalanced data, in: 20th International Conference on Pattern Recognition (ICPR’10), 2010, pp. 617–620, V. García, R.A. Mollineda, J.S. Sánchez, Classifier performance assessment in two-class imbalanced problems, Internal Communication (2012)). IBA weights a performance measure, that aims to make it more sensitive for imbalanced domains. The weighting factor favors those results with moderately better classification rates on the minority class. IBA is formulated as follows:

$$IBA_{\alpha}(M)=(1+\alpha · Dom)M \ (Equation \ 7)$$

where (1 + α · Dom) is the weighting factor and M represents a performance metric. The objective is to moderately favor the classification models with higher prediction rate on the minority class (without underestimating the relevance of the majority class) by means of a weighted function of any plain performance evaluation measure.

A comparison regarding these evaluation proposals for imbalanced datasets is out of the scope of this web. For this reason, we refer any interested reader to find a deep experimental study in V. García, R.A. Mollineda, J.S. Sánchez, Classifier performance assessment in two-class imbalanced problems, Internal Communication (2012) and T. Raeder, G. Forman, N.V. Chawla, Learning from imbalanced data: evaluation matters, in: D.E. Holmes, L.C. Jain (Eds.), Data Mining: Found. and Intell. Paradigms, vol. ISRL 23, Springer-Verlag, 2012, pp. 315–331.

Problems related to intrinsic data characteristics

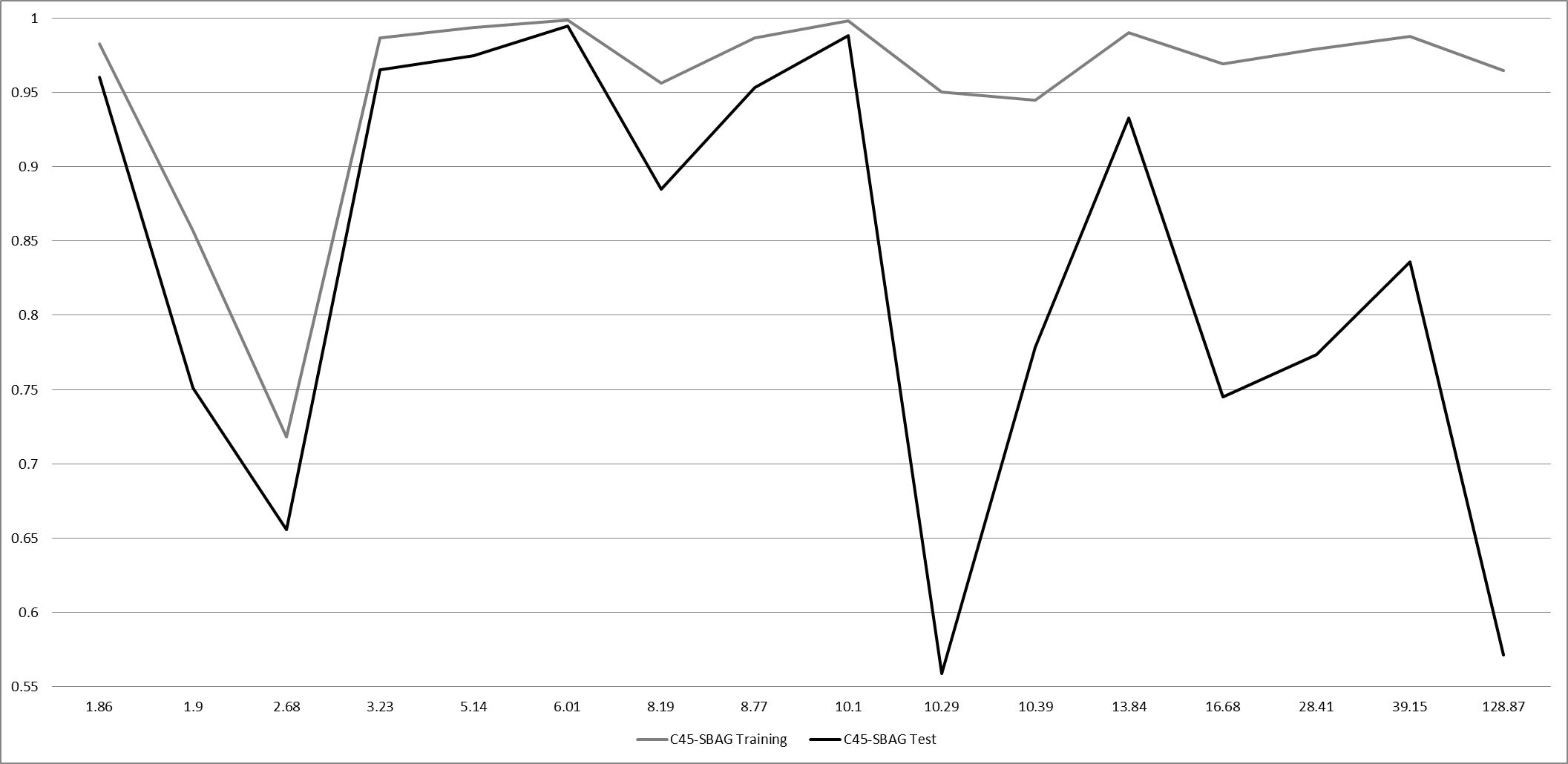

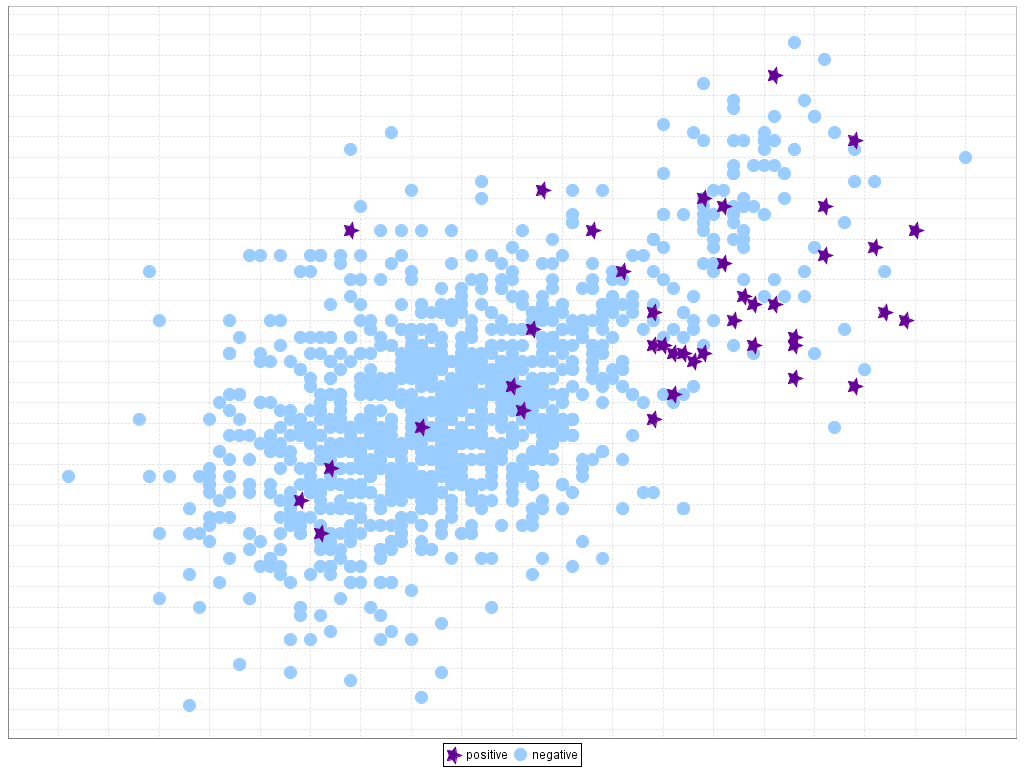

As it was stated in the introduction of this web, skewed class distributions do not hinder the learning task by itself (H. He, E.A. Garcia, Learning from imbalanced data, IEEE Transactions on Knowledge and Data Engineering 21 (9) (2009) 1263–1284. doi: 10.1109/TKDE.2008.239, Y. Sun, A.K.C. Wong, M.S. Kamel, Classification of imbalanced data: a review, International Journal of Pattern Recognition and Artificial Intelligence 23 (4) (2009) 687–719. doi: 10.1142/S0218001409007326), but usually a series of difficulties related with this problem turn up. This issue is depicted in Figure 2, in which we show the performance of the C4.5 decision tree with the SMOTEBagging ensemble (S. Wang, X. Yao, Diversity analysis on imbalanced data sets by using ensemble models, in: Proceedings of the 2009 IEEE Symposium on Computational Intelligence and Data Mining (CIDM'09), 2009, pp. 324–331) with 66 datasets with an IR ranging from 1.82 to 128.87, ordered according to the IR, in order to search for some regions of interesting good or bad behavior. As we can observe, there is no pattern of behavior for any range of IR, and the results can be poor both for low and high imbalanced data.

Figure 2. Performance in training and testing for the C4.5 decision tree with the SMOTEBagging ensemble as a function of IR

Related to this issue, in this section we aim to make a discussion on the nature of the problem itself, emphasizing several data intrinsic characteristics that do have a strong influence on imbalanced classification, in order to be able to address this problem in a more feasible way.

With this objective in mind, we focus our analysis on using the C4.5 classifier, in order to develop a basic but descriptive study by showing a series of patterns of behavior, following a kind of "educational scheme". With respect to the previous section, which was carried out in an empirical way, this part of the study is devoted to enumerating the scenarios that can be found when dealing with classification with imbalanced data, emphasizing their main issues that will allow us to design a better algorithm that can be adapted to different niches of the problem.

We acknowledge that some of the data intrinsic characteristics described along this section share some features and it is usual that, for a given dataset, several "sub-problems" can be found simultaneously. Nevertheless, we consider a simplified view of all these scenarios to serve as a global introduction to the topic.

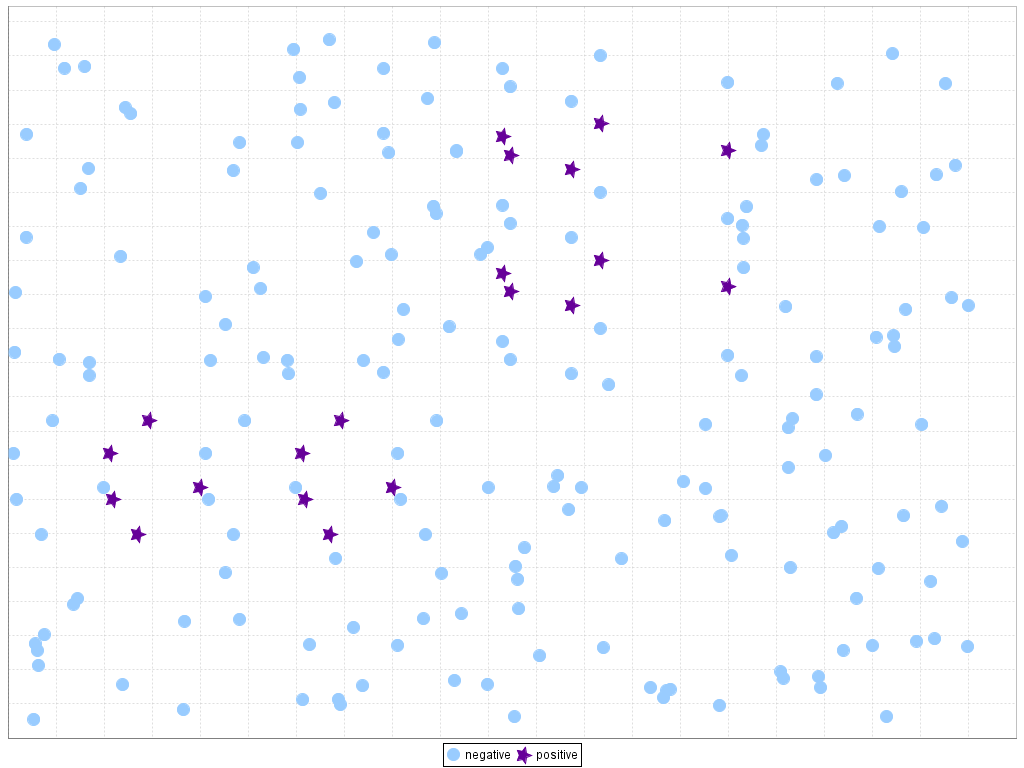

Small disjuncts

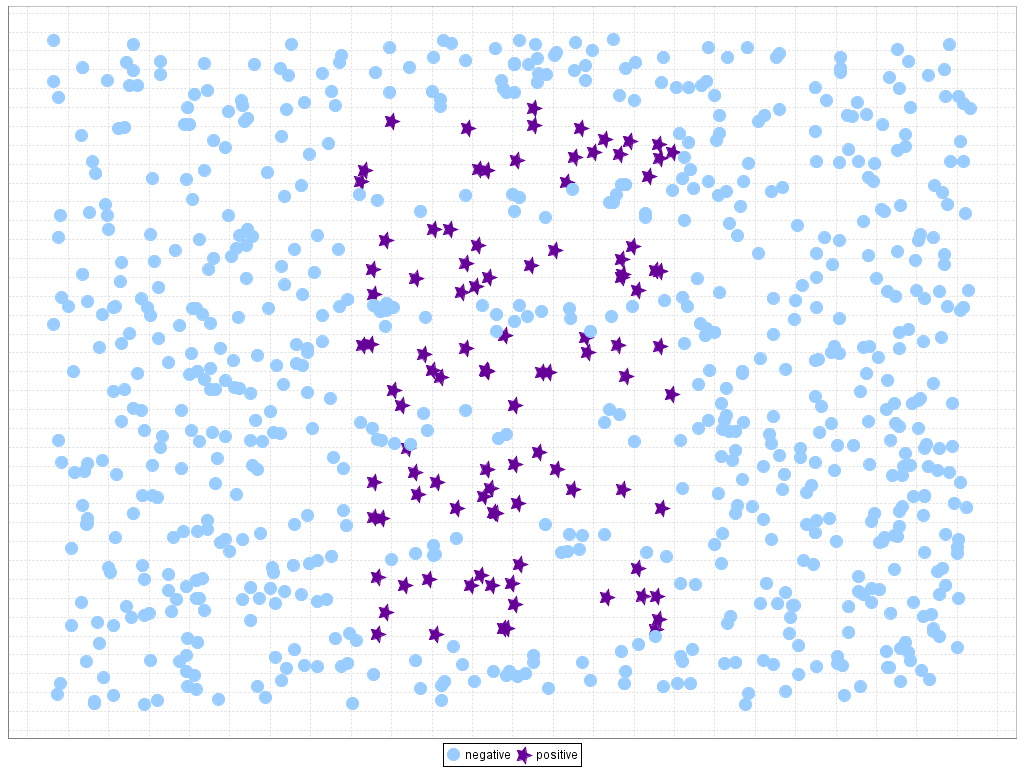

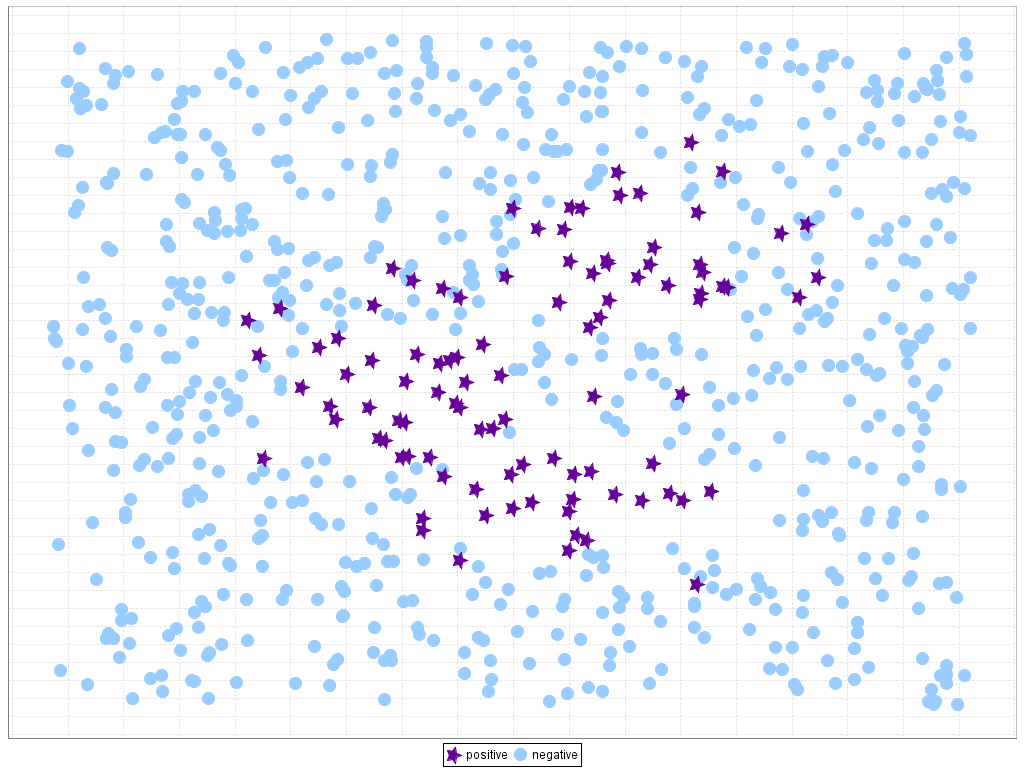

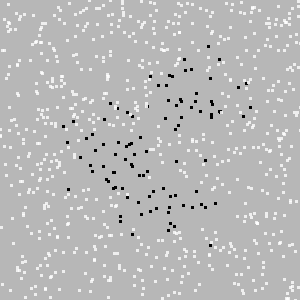

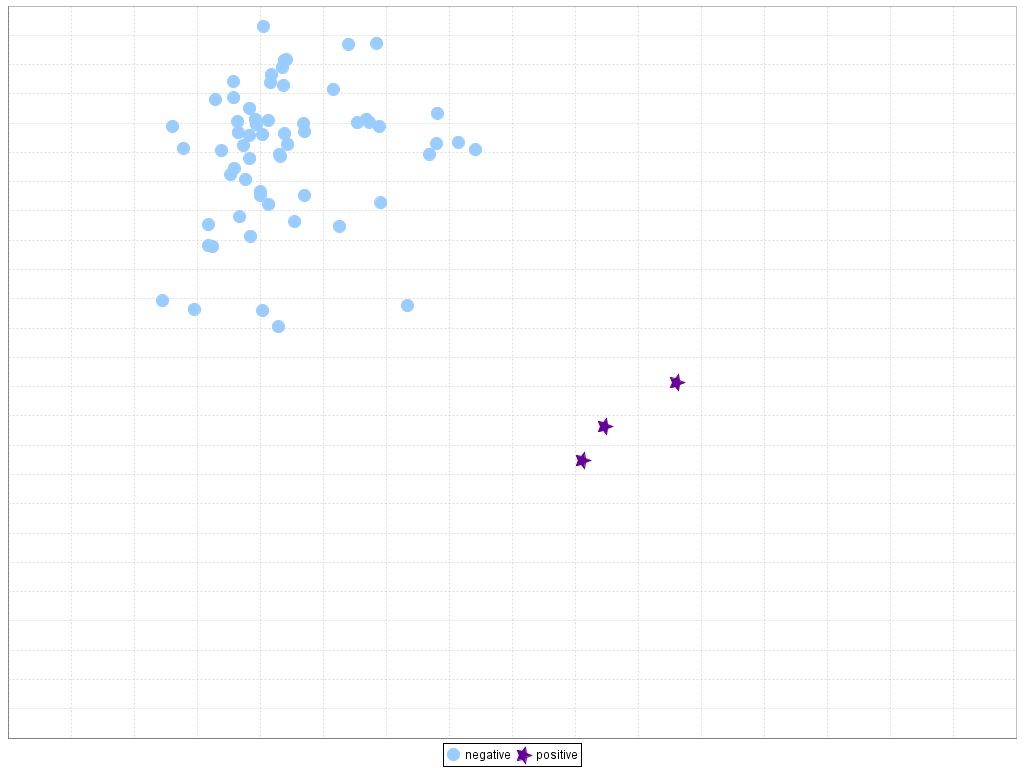

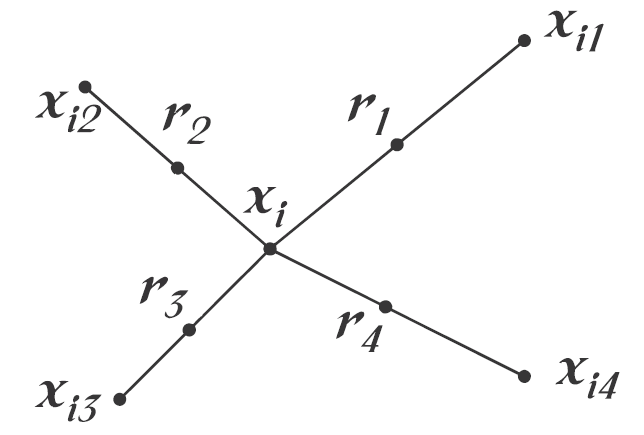

The presence of the imbalanced classes is closely related to the problem of small disjuncts. This situation occurs when the concepts are represented within small clusters, which arise as a direct result of underrepresented subconcepts (A. Orriols-Puig, E. Bernadó-Mansilla, D.E. Goldberg, K. Sastry, P.L. Lanzi, Facetwise analysis of XCS for problems with class imbalances, IEEE Transactions on Evolutionary Computation 13 (2009) 260–283. doi: 10.1109/TEVC.2009.2019829, G.M. Weiss, F.J. Provost, Learning when training data are costly: the effect of class distribution on tree induction, Journal of Artificial Intelligence Research 19 (2003) 315–354. doi: 10.1613/jair.1199). Although those small disjuncts are implicit in most of the problems, the existence of this type of areas highly increases the complexity of the problem in the case of class imbalance, because it becomes hard to know whether these examples represent an actual subconcept or are merely attributed to noise (T. Jo, N. Japkowicz, Class imbalances versus small disjuncts, ACM SIGKDD Explorations Newsletter 6 (1) (2004) 40–49 doi: 10.1145/1007730.1007737). This situation is represented in Figure 3, where we show an artificially generated dataset with small disjuncts for the minority class and the "Subclus" problem created in K. Napierala, J. Stefanowski, S. Wilk, Learning from imbalanced data in presence of noisy and borderline examples, in: Proceedings of the 7th International Conference on Rough Sets and Current Trends in Computing (RSCTC'10), Lecture Notes on Artificial Intelligence, vol. 6086, 2010, pp. 158–167, where we can find small disjuncts for both classes: the negative samples are underrepresented with respect to the positive samples in the central region of positive rectangular areas, while the positive samples only cover a small part of the whole dataset and are placed inside the negative class. We must point out that, in all figures of this web, positive instances are represented with dark stars whereas negative instances are depicted with light circles.

Figure 3. Example of small disjuncts on imbalanced data

The problem of small disjuncts becomes accentuated for those classification algorithms which are based on a divide-and-conquer approach (G.M. Weiss, Mining with rarity: a unifying framework, SIGKDD Explorations 6 (1) (2004) 7–19. doi: 10.1145/1007730.1007734). This methodology consists in subdividing the original problem into smaller ones, such as the procedure used in decision trees, and can lead to data fragmentation (J.H. Friedman, R. Kohavi, Y. Yun, Lazy decision trees, in: Proceedings of the AAAI/IAAI, vol. 1, 1996, pp. 717–724), that is, to obtain several partitions of data with a few representation of instances. If the IR of the data is high, this handicap is obviously more severe.

Several studies by Weiss (G.M. Weiss, Mining with rare cases, in: O. Maimon, L. Rokach (Eds.), The Data Mining and Knowledge Discovery Handbook, Springer, 2005, pp. 765–776, G.M. Weiss, The impact of small disjuncts on classifier learning, in: R. Stahlbock, S.F. Crone, S. Lessmann (Eds.), Data Mining: Annals of Information Systems, vol. 8, Springer, 2010, pp. 193–226) analyze this factor in depth and enumerate several techniques for handling the problem of small disjuncts:

- Obtain additional training data. The lack of data can induce the apparition of small disjuncts, especially in the minority class, and these areas may be better covered just by employing an informed sampling scheme (N. Japkowicz, Concept-learning in the presence of between-class and within-class imbalances, in: E. Stroulia, S. Matwin (Eds.), Proceedings of the 14th Canadian Conference on Advances in Artificial Intelligence (CCAI'08), Lecture Notes in Computer Science, vol. 2056, Springer, 2001, pp. 67–77).

- Use a more appropriate inductive bias. If we aim to be able to properly detect the areas of small disjuncts, some sophisticated mechanisms must be employed for avoiding the preference for the large areas of the problem. For example, R.C. Holte, L. Acker, B.W. Porter, Concept learning and the problem of small disjuncts, in: Proceedings of the International Joint Conferences on Artificial Intelligence, IJCAI'89, 1989, pp. 813–818 modified CN2 so that its maximum generality bias is used only for large disjuncts, and a maximum specificity bias was then used for small disjuncts. However, this approach also degrades the performance of the small disjuncts, and some authors proposed to refine the search and to use different learners for the examples that fall in the large disjuncts and on the small disjuncts separately (D.R. Carvalho, A.A. Freitas, A hybrid decision tree/genetic algorithm method for data mining, Information Sciences 163 (1–3) (2004) 13–35. doi: 10.1016/j.ins.2003.03.013, K.M. Ting, The problem of small disjuncts: its remedy in decision trees, in: Proceedings of the 10th Canadian Conference on Artificial Intelligence (CCAI'94), 1994, pp. 91–97).

- Using more appropriate metrics. This issue is related to the previous one in the sense that, for the data mining process, it is recommended to use specific measures for imbalanced data, in a way that the minority classes in the small disjuncts are positively weighted when obtaining the classification model (G.M. Weiss, Timeweaver: a genetic algorithm for identifying pre-dictive patterns in sequences of events, in: W. Banzhaf, J. Daida, A.E. Eiben, M.H. Garzon, V. Honavar, M. Jakiela, R.E. Smith (Eds.), Proceedings of the Genetic and Evolutionary Computation Conference GECCO'99, vol. 1, Morgan Kaufmann, Orlando, Florida, USA, 1999, pp. 718–725). For example, the use of precision and recall for the minority and majority classes, respectively, can lead to generate more precise rules for the positive class (P. Ducange, B. Lazzerini, F. Marcelloni, Multi-objective genetic fuzzy classifiers for imbalanced and cost-sensitive datasets, Soft Computing 14 (7) (2010) 713–728. doi: 10.1007/s00500-009-0460-y, M.V. Joshi, V. Kumar, R.C. Agarwal, Evaluating boosting algorithms to classify rare classes: comparison and improvements, in: Proceedings of the 2001 IEEE International Conference on Data Mining (ICDM'01), IEEE Computer Society, Washington, DC, USA, 2001, pp. 257–264).

- Disabling pruning. Pruning tends to eliminate most small disjuncts by a generalization of the obtained rules. Therefore, this methodology is not recommended.

- Employ boosting. Boosting algorithms, such as the AdaBoost algorithm, are iterative algorithms that place different weights on the training distribution each iteration (R.E. Schapire, A brief introduction to boosting, in: Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI'99), 1999, pp. 1401–1406). Following each iteration, boosting increases the weights associated with the incorrectly classified examples and decreases the weights associated with the correctly classified examples. Because instances in the small disjuncts are known to be difficult to predict, it is reasonable to believe that boosting will improve their classification performance. Following this idea, many approaches have been developed by modifying the standard boosting weight-update mechanism in order to improve the performance on the minority class and the small disjuncts (N.V. Chawla, A. Lazarevic, L.O. Hall, K.W. Bowyer, SMOTEBoost: Improving prediction of the minority class in boosting, in: Proceedings of 7th European Conference on Principles and Practice of Knowledge Discovery in Databases (PKDD'03), 2003, pp. 107–119, W. Fan, S.J. Stolfo, J. Zhang, P.K. Chan, Adacost: misclassification cost-sensitive boosting, in: Proceedings of the 16th International Conference on Machine Learning (ICML'96), Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, 1999, pp. 97–105, H. Guo, H.L. Viktor, Learning from imbalanced data sets with boosting and data generation: the DataBoost-IM approach, SIGKDD Explorations Newsletter 6 (2004) 30–39. doi: 10.1145/1007730.1007736, S. Hu, Y. Liang, L. Ma, Y. He, MSMOTE: improving classification performance when training data is imbalanced, in: Proceedings of the 2nd International Workshop on Computer Science and Engineering (WCSE'09), vol. 2, 2009, pp. 13–17, M.V. Joshi, V. Kumar, R.C. Agarwal, Evaluating boosting algorithms to classify rare classes: comparison and improvements, in: Proceedings of the 2001 IEEE International Conference on Data Mining (ICDM'01), IEEE Computer Society, Washington, DC, USA, 2001, pp. 257–264, C. Seiffert, T.M. Khoshgoftaar, J. Van Hulse, A. Napolitano, RUSBoost: a hybrid approach to alleviating class imbalance, IEEE Transactions on System, Man and Cybernetics A 40 (1) (2010) 185–197. doi: 10.1109/TSMCA.2009.2029559, Y. Sun, M.S. Kamel, A.K.C. Wong, Y. Wang, Cost-sensitive boosting for classification of imbalanced data, Pattern Recognition 40 (12) (2007) 3358–3378. doi: 10.1016/j.patcog.2007.04.009, K.M. Ting, A comparative study of cost-sensitive boosting algorithms, in: Proceedings of the 17th International Conference on Machine Learning (ICML'00), Stanford, CA, USA, 2000, pp. 983–990).

Finally, we must emphasize the use of the CBO method (T. Jo, N. Japkowicz, Class imbalances versus small disjuncts, ACM SIGKDD Explorations Newsletter 6 (1) (2004) 40–49. doi: 10.1145/1007730.1007737), which is a resampling strategy that is used to counteract simultaneously the between-class imbalance and the within-class imbalance. Specifically, this approach detects the clusters in the positive and negative classes using the k-means algorithm in a first step. In a second step, it randomly replicates the examples for each cluster (except the largest negative cluster) in order to obtain a balanced distribution between clusters from the same class and between classes. These clusters can be viewed as small disjuncts in the data, and therefore this preprocessing mechanism is aimed to stress the significance of these regions.

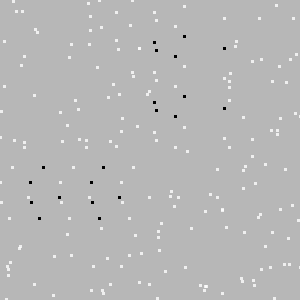

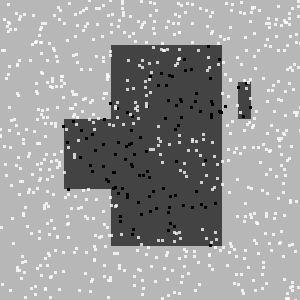

In order to show the goodness of this approach, we depict a short analysis on the two previously presented artificial datasets, that is, our artificial problem and the Subclus dataset, studying the behavior of the C4.5 classifier according to both the differences in performance between the original and the preprocessed data and the boundaries obtained in each case. We must point out that the whole dataset is used in both cases.

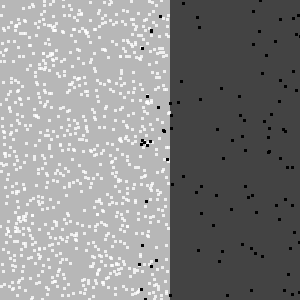

Table 2 shows the results of C4.5 in each case, where we must emphasize that the application of CBO enables the correct identification of all the examples for both classes. Regarding the visual output of the C4.5 classifier (Figure 4), in the first case we observe that for the original data no instances of the positive class are recognized, and that there is an overgeneralization of the negative instances, whereas the CBO method achieves the correct identification of the four clusters in the data, by replicating an average of 11.5 positive examples and 1.25 negative examples. In the Subclus problem, there is also an overgeneralization for the original training data, but in this case we found that the small disjuncts of the negative class surrounding the positive instances are the ones which are misclassified now. Again, the application of the CBO approach results on a perfect classification for all data, having 7.8 positive instances for each "data point" and 1.12 negative ones.

| Dataset | Original Data | Preprocessed Data with CBO | ||||

| TPrate | TNrate | AUC | TPrate | TNrate | AUC | |

| Artificial dataset | 0.0000 | 1.0000 | 0.5000 | 1.0000 | 1.0000 | 1.0000 |

| Subclus dataset | 1.0000 | 0.9029 | 0.9514 | 1.0000 | 1.0000 | 1.0000 |

Figure 4. Boundaries obtained by C4.5 with the original and preprocessed data using CBO for addressing the problem of small disjuncts. The new instances for CBO are just replicates of the initial examples

Lack of density

One problem that can arise in classification is the small sample size (S.J. Raudys, A.K. Jain, Small sample size effects in statistical pattern recognition: recommendations for practitioners, IEEE Transactions on Pattern Analysis and Machine Intelligence 13 (3) (1991) 252–264. doi: 10.1109/34.75512). This issue is related to the "lack of density" or "lack of information", where induction algorithms do not have enough data to make generalizations about the distribution of samples, a situation that becomes more difficult in the presence of high dimensional and imbalanced data. A visual representation of this problem is depicted in Figure 5, where we show a scatter plot for the training data of the yeast4 problem (attributes mcg vs. gvh) only with a 10% of the original instances and with all the data. We can appreciate that it becomes very hard for the learning algorithm to obtain a model that is able to perform a good generalization when there is not enough data that represents the boundaries of the problem and, what it is also most significant, when the concentration of minority examples is so low that they can be simply treated as noise.

Figure 5. Lack of density or small sample size on the yeast4 dataset

The combination of imbalanced data and the small sample size problem presents a new challenge to the research community (M. Wasikowski, X.-W. Chen, Combating the small sample class imbalance problem using feature selection, IEEE Transactions on Knowledge and Data Engineering 22 (10) (2010) 1388–1400. doi: 10.1109/TKDE.2009.187). In this scenario, the minority class can be poorly represented and the knowledge model to learn this data space becomes too specific, leading to overfitting. Furthermore, as stated in the previous section, the lack of density in the training data may also cause the introduction of small disjuncts. Therefore, two datasets can not be considered to present the same complexity because they have the same IR, as it is also important how the training data represents the minority instances.

In G.M. Weiss, F.J. Provost, Learning when training data are costly: the effect of class distribution on tree induction, Journal of Artificial Intelligence Research 19 (2003) 315–354. doi: 10.1613/jair.1199, the authors have studied the effect of class distribution and training-set size on the classifier performance using C4.5 as base learning algorithm. Their analysis consisted in varying both the available training data and the degree of imbalance for several datasets and observing the differences for the AUC metric in those cases.

The first finding they extracted is somehow quite trivial, that is, the higher the number of training data, the better the performance results are, independently of the class distribution. A second important fact that they highlighted is that the IR that yields the best performances occasionally vary from one training-set size to another, giving the support to the notion that there may be a "best" marginal class distribution for a learning task and suggests that a progressive sampling algorithm may be useful in locating the class distribution that yields the best, or nearly best, classifier performance.

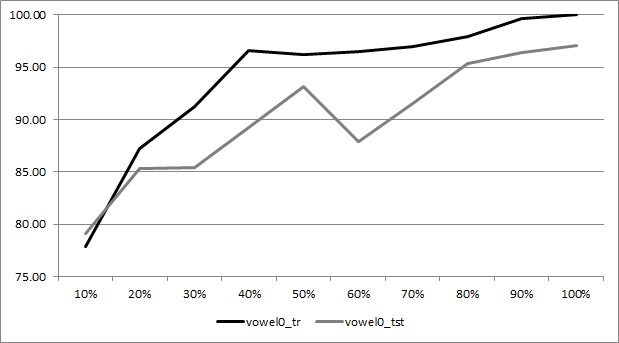

In order to visualize the effect of the density of examples in the learning process, in Figure 6 we show the results in AUC for the C4.5 classifier both for training (black line) and testing (grey line) for the vowel0 problem, varying the percentage of training instances from 10% to the original training size. This short experiment is carried out on a 5-fold cross validation, where the test data is not modified, i.e. in all cases it represents a 20% of the original data; the results shown are the average of the five partitions.

Figure 6. AUC performance for the C4.5 classifier with respect to the proportion of examples in the training set for the vowel0 problem

From this graph, we may distinguish a growth rate directly proportional to the number of training instances that are being used. This behavior reflects the findings enumerated previously from G.M. Weiss, F.J. Provost, Learning when training data are costly: the effect of class distribution on tree induction, Journal of Artificial Intelligence Research 19 (2003) 315–354. doi: 10.1613/jair.1199.

Class separability

The problem of overlapping between classes appears when a region of the data space contains a similar quantity of training data from each class. This situation leads to develop an inference with almost the same a priori probabilities in this overlapping area, which makes very hard or even impossible the distinction between the two classes. Indeed, any "linearly separable" problem can be solved by any simple classifier regardless of the class distribution.

There are several works which aim to study the relationship between overlapping and class imbalance. Particularly, in R.C. Prati, G.E.A.P.A., Batista, Class imbalances versus class overlapping: an analysis of a learning system behavior, in: Proceedings of the 2004 Mexican International Conference on Artificial Intelligence (MICAI'04), 2004, pp. 312–321 one can find a study where the authors propose several experiments with synthetic datasets varying the imbalance ratio and the overlap existing between the two classes. Their conclusions stated that the class probabilities are not the main responsibles for the hinder in the classification performance, but instead the degree of overlapping between the classes.

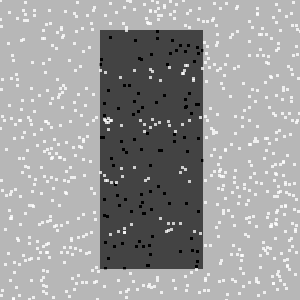

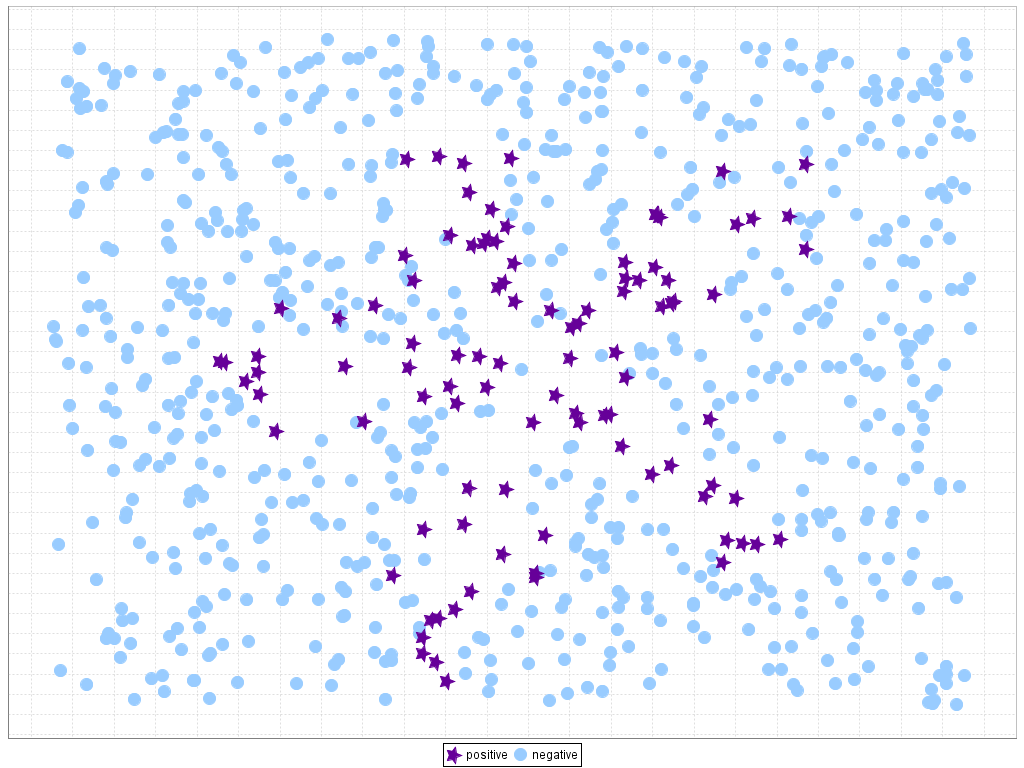

To reproduce the example for this scenario, we have created an artificial dataset with 1,000 examples having an IR of 9, i.e. 1 positive instance per 10 instances. Then, we have varied the degree of overlap for individual feature values, from no overlap to 100% of overlap, and we have used the C4.5 classifier in order to determine the influence of overlapping with respect to a fixed IR. First, Table 3 shows the results for the considered cases, where we observe that the performance is highly degrading with the increase of the overlap. Additionally, Figure 7 shows this issue, where we can observe that the decision tree is not only unable to obtain a correct discrimination between both classes when they are overlapped, but also that the preferred class is the majority one, leading to low values for the AUC metric.

| Overlap Degree (%) | TPrate | TNrate | AUC |

| 0% | 1.0000 | 1.0000 | 1.0000 |

| 20% | 0.7900 | 1.0000 | 0.8950 |

| 40% | 0.4900 | 1.0000 | 0.7450 |

| 50% | 0.4700 | 1.0000 | 0.7350 |

| 60% | 0.4200 | 1.0000 | 0.7100 |

| 80% | 0.2100 | 0.9989 | 0.6044 |

| 100% | 0.0000 | 1.0000 | 0.5000 |

Figure 7. Example of overlapping imbalanced datasets: boundaries detected by C4.5

Additionally, in V. García, R.A. Mollineda, J.S. Sánchez, On the k-NN performance in a challenging scenario of imbalance and overlapping, Pattern Analysis Applications 11 (3–4) (2008) 269–280. doi: 10.1007/s10044-007-0087-5, a similar study with several algorithms in different situations of imbalance and overlap focusing on the the kNN algorithm was developed. In this case, the authors proposed two different frameworks: on the one hand, they try to find the relation when the imbalance ratio in the overlap region is similar to the overall imbalance ratio whereas, on the other hand, they search for the relation when the imbalance ratio in the overlap region is inverse to the overall one (the positive class is locally denser than the negative class in the overlap region). They showed that when the overlapped data is not balanced, the IR in overlapping can be more important than the overlapping size. In addition, classifiers using a more global learning procedure attain greater TP rates whereas more local learning models obtain better TN rates than the former.

In M. Denil, T. Trappenberg, Overlap versus imbalance, in: Proceedings of the 23rd Canadian Conference on Advances in Artificial Intelligence (CCAI'10), Lecture Notes on Artificial Intelligence, vol. 6085, 2010, pp. 220–231, the authors examine the effects of overlap and imbalance on the complexity of the learned model and demonstrate that overlapping is a far more serious factor than imbalance in this respect. They demonstrate that these two problems acting in concert cause difficulties that are more severe than one would expect by examining their effects in isolation. In order to do so, they also use synthetic datasets for classifying with a SVM, where they vary the imbalance ratio, the overlap between classes and the imbalance ratio and overlap jointly. Their results show that, when the training set size is small, high levels of imbalance cause a dramatic drop in classifier performance, explained by the presence of small disjuncts. Overlapping classes cause a consistent drop in performance regardless of the size of the training set. However, with overlapping and imbalance combined, the classifier performance is degraded significantly beyond what the model predicts.

In one of the latest researches on the topic J. Luengo, A. Fernández, S. García, F. Herrera, Addressing data complexity for imbalanced data sets: analysis of SMOTE-based oversampling and evolutionary undersampling, Soft Computing 15 (10) (2011) 1909–1936. doi: 10.1007/s00500-010-0625-8, the authors have empirically extracted some interesting findings on real world datasets. Specifically, the authors depicted the performance of the different datasets ordered according to different data complexity measures (including the IR) in order to search for some regions of interesting good or bad behavior. They could not characterize any interesting behavior related to IR, but they do for other metrics that measure the overlap between the classes.

Finally, in R. Martín-Félez, R.A., Mollineda, On the suitability of combining feature selection and resampling to manage data complexity, in: Proceedings of the Conferencia de la Asociacion Española de Inteligencia Artificial (CAEPIA'09), Lecture Notes on Artificial Intelligence, vol. 5988, 2010, pp. 141–150, an approach that combines preprocessing and feature selection (strictly in this order) is proposed. This approach works in a way where preprocessing deals with class distribution and small disjuncts and feature selection somehow reduces the degree of overlapping. In a more general way, the idea behind this approach tries to overcome different sources of data complexity such as the class overlap, irrelevant and redundant features, noisy samples, class imbalance, low ratios of the sample size to dimensionality and so on, using different approaches used to solve each complexity.

Noisy data

Noisy data is known to affect the way any data mining system behaves (C.E. Brodley, M.A. Friedl, Identifying mislabeled training data, Journal of Artificial Intelligence Research 11 (1999) 131–167. doi: 10.1613/jair.606, J.A. Sáez, J. Luengo, F. Herrera, A first study on the noise impact in classes for fuzzy rule based classification systems, in: Proceedings of the 2010 IEEE International Conference on Intelligent Systems and Knowledge Engineering (ISKE'10), IEEE Press, 2010, pp. 153–158, X. Zhu, X. Wu, Class noise vs. attribute noise: a quantitative study, Artificial Intelligence Review 22 (3) (2004) 177–210. doi: 10.1007/s10462-004-0751-8). Focusing on the scenario of imbalanced data, the presence of noise has a greater impact on the minority classes than on usual cases (G.M. Weiss, Mining with rarity: a unifying framework, SIGKDD Explorations 6 (1) (2004) 7–19. doi: 10.1145/1007730.1007734); since the positive class has fewer examples to begin with, it will take fewer "noisy" examples to impact the learned subconcept. This issue is depicted in Figure 8, in which we can observe the decision boundaries obtained with SMOTE+C4.5 in the Subclus problem without noisy data and how the frontiers between the classes are wrongly generated by introducing a 20% gaussian noise.

Figure 8. Example of the effect of noise in imbalanced datasets for SMOTE+C4.5 in the Subclus dataset

According to G.M. Weiss, Mining with rarity: a unifying framework, SIGKDD Explorations 6 (1) (2004) 7–19. doi: 10.1145/1007730.1007734, these "noise-areas" can be somehow viewed as "small disjuncts" and in order to avoid the erroneous generation of discrimination functions for these examples, some overfitting management techniques must be employed, such as pruning. However, the handicap of this methodology is that some correct minority classes will be ignored and, in this manner, the bias of the learner should be tuned-up in order to be able to provide a good global behavior for both classes of the problem.

For example, Batuwita and Palade developed the FSVM-CIL algorithm (R. Batuwita, V. Palade, FSVM-CIL: fuzzy support vector machines for class imbalance learning, IEEE Transactions on Fuzzy Systems 18 (3) (2010) 558–571. doi: 10.1109/TFUZZ.2010.2042721), a synergy between SVMs and fuzzy logic aimed to reflect the within-class importance of different training examples in order to suppress the effect of outliers and noise. The idea is to assign different fuzzy membership values to positive and negative examples and to incorporate this information in the SVM learning algorithm, aimed to reduce the effect of outliers and noise when finding the separating hyperplane.

In C. Seiffert, T.M. Khoshgoftaar, J. Van Hulse, A. Folleco, An empirical study of the classification performance of learners on imbalanced and noisy software quality data, Information Sciences (2013), In press. doi: 10.1016/j.ins.2010.12.016 we may find an empirical study on the effect of class imbalance and class noise on different classification algorithms and data sampling techniques. From this study, the authors extracted three important lessons on the topic:

- Classification algorithms are more sensitive to noise than imbalance. However, as imbalance increases in severity, it plays a larger role in the performance of classifiers and sampling techniques.

- Regarding the preprocessing mechanisms, simple undersampling techniques such as random undersampling and ENN performed the best overall, at all levels of noise and imbalance. Peculiarly, as the level of imbalance is increased, ENN proves to be more robust in the presence of noise. Additionally, OSS consistently proves itself to be relatively unaffected by an increase in the noise level. Other techniques such as random oversampling, SMOTE or Borderline-SMOTE obtain good results on average, but do not show the same behavior as undersampling.

- Finally, the most robust classifiers tested over imbalanced and noisy data are bayesian classifiers and SVMs, performing better on average than rule induction algorithms or instance based learning. Furthermore, whereas most algorithms only experience small changes in AUC when imbalance was increased, the performance of Radial Basis Functions is significantly hindered when the imbalance ratio increases. For rule learning algorithms, the presence of noise degrades the performance more quickly than in other algorithms.

Additionally, in T.M. Khoshgoftaar, J. Van Hulse, A. Napolitano, Comparing boosting and bagging techniques with noisy and imbalanced data, IEEE Transactions on Systems, Man and Cybernetics, Part A: Systems and Humans 41 (3) (2011) 552–568. doi: 10.1109/TSMCA.2010.2084081, the authors presented a similar study on the significance of noise and imbalance data using bagging and boosting techniques. Their results show the goodness of the bagging approach without replacement, and they recommend the use of noise reduction techniques prior to the application of boosting procedures.

As a final remark, we show a brief experimental study on the effect of noise over a specific imbalanced problem such as the Subclus dataset (K. Napierala, J. Stefanowski, S. Wilk, Learning from imbalanced data in presence of noisy and borderline examples, in: Proceedings of the 7th International Conference on Rough Sets and Current Trends in Computing (RSCTC'10), Lecture Notes on Artificial Intelligence, vol. 6086, 2010, pp. 158–167.). Table 4 includes the results for C4.5 with no preprocessing (None) and four different approaches, namely random undersampling, SMOTE (N.V. Chawla, K.W. Bowyer, L.O. Hall, W.P. Kegelmeyer, SMOTE: synthetic minority over-sampling technique, Journal of Artificial Intelligent Research 16 (2002) 321–357. doi: 10.1613/jair.953), SMOTE+ENN (G.E.A.P.A. Batista, R.C. Prati, M.C. Monard, A study of the behaviour of several methods for balancing machine learning training data, SIGKDD Explorations 6 (1) (2004) 20–29. doi: 10.1145/1007730.1007735) and SPIDER2 (K. Napierala, J. Stefanowski, S. Wilk, Learning from imbalanced data in presence of noisy and borderline examples, in: Proceedings of the 7th International Conference on Rough Sets and Current Trends in Computing (RSCTC'10), Lecture Notes on Artificial Intelligence, vol. 6086, 2010, pp. 158–167.), a method designed for addressing noise and borderline examples, which will be detailed when discussing borderline examples.

This table is divided into two parts, the leftmost columns show the results with the original data and the columns in the right side show the results when adding a 20% of gaussian noise to the data. From this table we may conclude that in all cases the presence of noise degrades the performance of the classifier especially on the positive instances (TPrate). Regarding the preprocessing approaches, the best behavior is obtained by SMOTE+ENN and SPIDER2, both of which include a cleaning mechanism to alleviate the problem of noisy data, whereas the latter also oversample the borderline minority examples.

| Dataset | Original Data | 20% of Gaussian noise | ||||

| TPrate | TNrate | AUC | TPrate | TNrate | AUC | |

| None | 1.0000 | 0.9029 | 0.9514 | 0.0000 | 1.0000 | 0.5000 |

| RandomUnderSampling | 1.0000 | 0.7800 | 0.8900 | 0.9700 | 0.7400 | 0.8550 |

| SMOTE | 0.9614 | 0.9529 | 0.9571 | 0.8914 | 0.8800 | 0.8857 |

| SMOTE+ENN | 0.9676 | 0.9623 | 0.9649 | 0.9625 | 0.9573 | 0.9599 |

| SPIDER2 | 1.0000 | 1.0000 | 1.0000 | 0.9480 | 0.9033 | 0.9256 |

Borderline examples

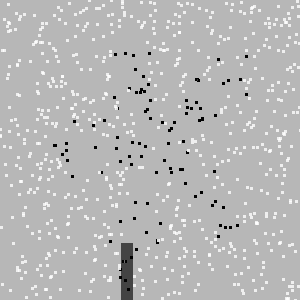

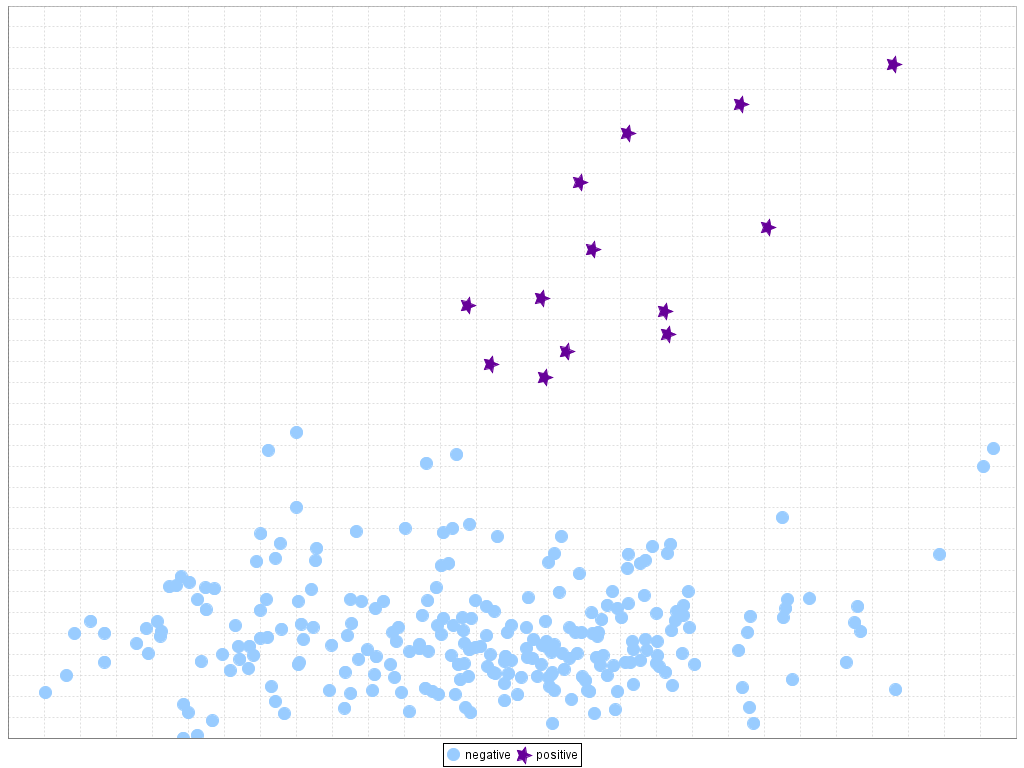

Inspired by M. Kubat, S. Matwin, Addressing the curse of imbalanced training sets: one-sided selection, in: Proceedings of the 14th International Conference on Machine Learning (ICML'97), 1997, pp. 179–186, we may distinguish between safe, noisy and borderline examples. Safe examples are placed in relatively homogeneous areas with respect to the class label. By noisy examples we understand individuals from one class occurring in safe areas of the other class, as introduced in the previous section. Finally, Borderline examples are located in the area surrounding class boundaries, where the minority and majority classes overlap. Figure 9 represents two examples given by K. Napierala, J. Stefanowski, S. Wilk, Learning from imbalanced data in presence of noisy and borderline examples, in: Proceedings of the 7th International Conference on Rough Sets and Current Trends in Computing (RSCTC'10), Lecture Notes on Artificial Intelligence, vol. 6086, 2010, pp. 158–16., named "Paw and "Clover", respectively. In the former, the minority class is decomposed into 3 elliptic subregions, where two of them are located close to each other, and the remaining smaller sub-region is separated (upper right cluster). The latter also represents a non-linear setting, where the minority class resembles a flower with elliptic petals, which makes difficult to determine the boundaries examples in order to carry out a correct discrimination of the classes.

Figure 9. Example of data with difficult borderline examples

The problem of noisy data and the management of borderline examples are closely related, where the cleaning techniques can be used, or are the basis for detecting and emphasizing these borderline instances and, what is most important, to distinguish them from noisy instances that can degrade the overall classification. In brief, the better the definition of the borderline areas the more precise the discrimination between the positive and negative classes will be (D.J. Drown, T.M. Khoshgoftaar, N. Seliya, Evolutionary sampling and software quality modeling of high-assurance systems, IEEE Transactions on Systems, Man, and Cybernetics, Part A 39 (5) (2009) 1097–1107. doi: 10.1109/TSMCA.2009.2020804).

The family of SPIDER methods were proposed in J. Stefanowski, S. Wilk, Improving rule based classifiers induced by MODLEM by selective pre-processing of imbalanced data, in: Proceedings of the RSKD Workshop at ECML/PKDD'07, 2007, pp. 54–65 to ease the problem of the improvement of sensitivity at the cost of specificity that appears in the standard cleaning techniques. The SPIDER techniques works by combining a cleaning step of the majority examples with a local oversampling of the borderline minority examples (K. Napierala, J. Stefanowski, S. Wilk, Learning from imbalanced data in presence of noisy and borderline examples, in: Proceedings of the 7th International Conference on Rough Sets and Current Trends in Computing (RSCTC'10), Lecture Notes on Artificial Intelligence, vol. 6086, 2010, pp. 158–167, J. Stefanowski, S. Wilk, Improving rule based classifiers induced by MODLEM by selective pre-processing of imbalanced data, in: Proceedings of the RSKD Workshop at ECML/PKDD'07, 2007, pp. 54–65, J. Stefanowski, S. Wilk, Selective pre-processing of imbalanced data for improving classification performance, in: Proceedings of the 10th International Conference on Data Warehousing and Knowledge, Discovery (DaWaK08), 2008, pp. 283–292).

We may also find other related techniques such as the Borderline-SMOTE (H. Han, W.Y. Wang, B.H. Mao, Borderline–SMOTE: a new over–sampling method in imbalanced data sets learning, in: Proceedings of the 2005 International Conference on Intelligent Computing (ICIC'05), Lecture Notes in Computer Science, vol. 3644, 2005, pp. 878–887), which seeks to oversample the minority class instances in the borderline areas, by defining a set of "Danger" examples, i.e. those which are most likely to be misclassified since they appear in the borderline areas, from which SMOTE generates synthetic minority samples in the neighborhood of the boundaries.

Other approaches such as Safe-Level-SMOTE (C. Bunkhumpornpat, K. Sinapiromsaran, C. Lursinsap, Safe–level–SMOTE: Safe–level–synthetic minority over–sampling TEchnique for handling the class imbalanced problem. In: Proceedings of the 13th Pacific–Asia Conference on Advances in Knowledge Discovery and Data Mining PAKDD'09, 2009, pp. 475–482) and ADASYN (H. He, Y. Bai, E.A. Garcia, S. Li, ADASYN: adaptive synthetic sampling approach for imbalanced learning, in: Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IJCNN'08), 2008, pp. 1322–1328) work in a similar way. The former is based on the premise that previous approaches, such as SMOTE and Borderline-SMOTE, may generate synthetic instances in unsuitable locations, such as overlapping regions and noise regions; therefore, the authors compute a "safe-level" value for each positive instance before generating synthetic instances and generate them closer to the largest safe level. On the other hand, the key idea of the ADASYN algorithm is to use a density distribution as a criterion to automatically decide the number of synthetic samples that need to be generated for each minority example, by adaptively changing the weights of different minority examples to compensate the skewed distributions.

In V. López, A. Fernández, M.J. del Jesus, F. Herrera, A hierarchical genetic fuzzy system based on genetic programming for addressing classification with highly imbalanced and borderline data-sets, Knowledge-Based Systems 38 (2013) 85–104. doi: 10.1016/j.knosys.2012.08.025, the authors use a hierarchical fuzzy rule learning approach, which defines a higher granularity for those problem subspaces in the borderline areas. The results have shown to be very competitive for highly imbalanced datasets in which this problem is accentuated.

Finally, in K. Napierala, J. Stefanowski, S. Wilk, Learning from imbalanced data in presence of noisy and borderline examples, in: Proceedings of the 7th International Conference on Rough Sets and Current Trends in Computing (RSCTC'10), Lecture Notes on Artificial Intelligence, vol. 6086, 2010, pp. 158–167, the authors presented a series of experiments in which it is shown that the degradation in performance of a classifier is strongly affected by the number of borderline examples. They showed that focused resampling mechanisms (such as the Neighborhood Cleaning Rule (J. Laurikkala, Improving identification of difficult small classes by balancing class distribution, in: Proceedings of the 8th Conference on AI in Medicine in Europe: Artificial Intelligence Medicine (AIME'01), 2001, pp. 63–66) or SPIDER2 (K. Napierala, J. Stefanowski, S. Wilk, Learning from imbalanced data in presence of noisy and borderline examples, in: Proceedings of the 7th International Conference on Rough Sets and Current Trends in Computing (RSCTC'10), Lecture Notes on Artificial Intelligence, vol. 6086, 2010, pp. 158–167)) work well when the number of borderline examples is large enough whereas, on the contrary case, oversampling methods allow the improvement of the precision for the minority class.

The behavior of the SPIDER2 approach is shown in Table 5 for both the Paw and Clover problems. There are 10 different problems for each one of these datasets, depending on the number of examples and IR (600-5 or 800-7), and the "disturbance ratio" (K. Napierala, J. Stefanowski, S. Wilk, Learning from imbalanced data in presence of noisy and borderline examples, in: Proceedings of the 7th International Conference on Rough Sets and Current Trends in Computing (RSCTC'10), Lecture Notes on Artificial Intelligence, vol. 6086, 2010, pp. 158–167), defined as the ratio of borderline examples from the minority class subregions (0 to 70%). From these results we must stress the goodness of the SPIDER2 preprocessing step especially for those problems with a high disturbance ratio, which are harder to solve.

| Dataset | Disturbance | 600 examples - IR 5 | 800 examples - IR 7 | ||||||

| None | SPIDER2 | None | SPIDER2 | ||||||

| AUCTr | AUCTst | AUCTr | AUCTst | AUCTr | AUCTst | AUCTr | AUCTst | ||

| Paw | 0 | 0.9568 | 0.9100 | 0.9418 | 0.9180 | 0.7095 | 0.6829 | 0.9645 | 0.9457 |

| 30 | 0.7298 | 0.7000 | 0.9150 | 0.8260 | 0.6091 | 0.5671 | 0.9016 | 0.8207 | |

| 50 | 0.7252 | 0.6790 | 0.9055 | 0.8580 | 0.5000 | 0.5000 | 0.9114 | 0.8400 | |

| 60 | 0.5640 | 0.5410 | 0.9073 | 0.8150 | 0.5477 | 0.5300 | 0.8954 | 0.7829 | |

| 70 | 0.6250 | 0.5770 | 0.8855 | 0.8350 | 0.5000 | 0.5000 | 0.8846 | 0.8164 | |

| Average | 0.7202 | 0.6814 | 0.9110 | 0.8504 | 0.5732 | 0.5560 | 0.9115 | 0.8411 | |

| Clover | 0 | 0.7853 | 0.7050 | 0.7950 | 0.7410 | 0.7607 | 0.7071 | 0.8029 | 0.7864 |

| 30 | 0.6153 | 0.5430 | 0.9035 | 0.8290 | 0.5546 | 0.5321 | 0.8948 | 0.7979 | |

| 50 | 0.5430 | 0.5160 | 0.8980 | 0.8070 | 0.5000 | 0.5000 | 0.8823 | 0.7907 | |

| 60 | 0.5662 | 0.5650 | 0.8798 | 0.8100 | 0.5000 | 0.5000 | 0.8848 | 0.8014 | |

| 70 | 0.5000 | 0.5000 | 0.8788 | 0.7690 | 0.5250 | 0.5157 | 0.8787 | 0.7557 | |

| Average | 0.6020 | 0.5658 | 0.8710 | 0.7912 | 0.5681 | 0.5510 | 0.8687 | 0.7864 | |

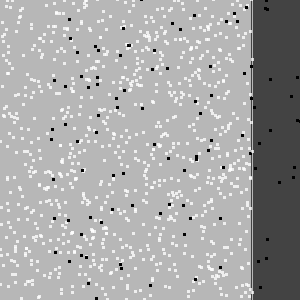

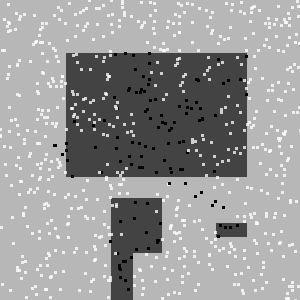

Additionally, and as a visual example of the behavior of this kind of methods, we show in Figures 10 and 11 the classification regions detected with C4.5 for the Paw and Clover problems using the original data and applying the SPIDER2 method. From these results we may conclude that the use of a methodology for stressing the borderline areas is very beneficial for correctly identifying the minority class instances.

Figure 10. Boundaries detected by C4.5 in the Paw problem (800 examples, IR 7 and disturbance ratio of 30)

Figure 11. Boundaries detected by C4.5 in the Clover problem (800 examples, IR 7 and disturbance ratio of 30)

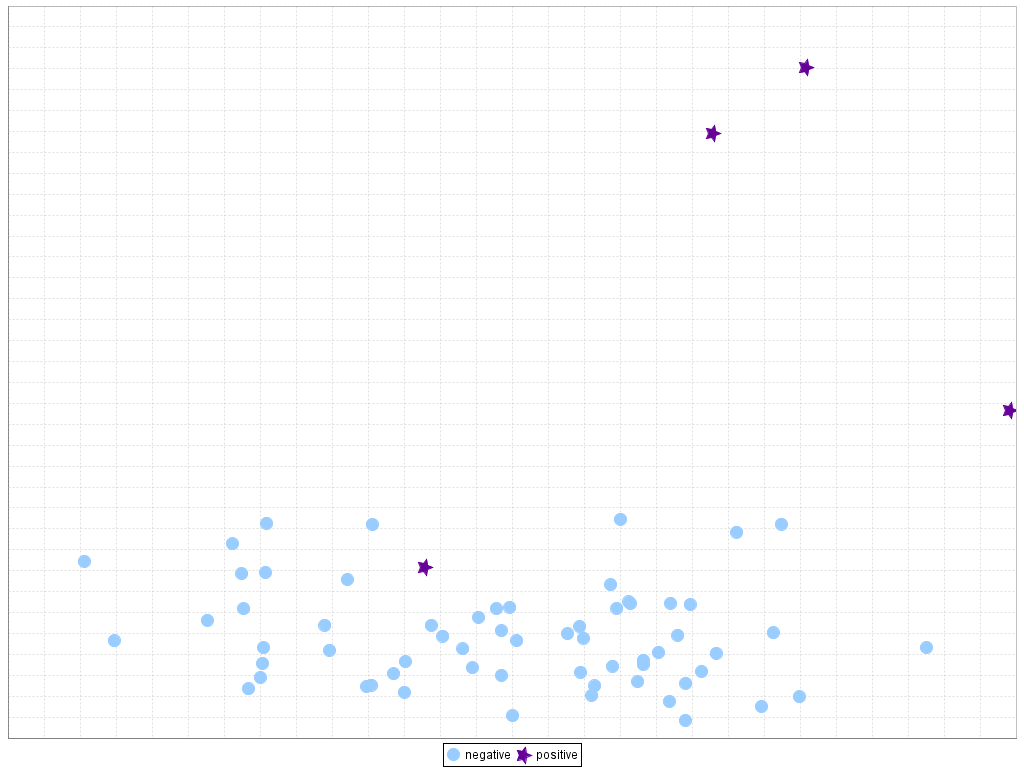

Dataset shift

The problem of dataset shift (R. Alaiz-Rodríguez, N. Japkowicz, Assessing the impact of changing environments on classifier performance, in: Proceedings of the 21st Canadian Conference on Advances in Artificial Intelligence (CCAI’08), Springer-Verlag, Berlin, Heidelberg, 2008, pp. 13–24, J.Q. Candela, M. Sugiyama, A. Schwaighofer, N.D. Lawrence, Dataset Shift in Machine Learning, The MIT Press, 2009, H. Shimodaira, Improving predictive inference under covariate shift by weighting the log-likelihood function, Journal of Statistical Planning and Inference 90 (2) (2000) 227–244. doi: 10.1016/S0378-3758(00)00115-4) is defined as the case where training and test data follow different distributions. This is a common problem that can affect all kind of classification problems, and it often appears due to sample selection bias issues. A mild degree of dataset shift is present in most real-world problems, but general classifiers are often capable of handling it without a severe performance loss.

However, the dataset shift issue is specially relevant when dealing with imbalanced classification, because in highly imbalanced domains, the minority class is particularly sensitive to singular classification errors, due to the typically low number of examples it presents (J.G. Moreno-Torres, F. Herrera, A preliminary study on overlapping and data fracture in imbalanced domains by means of genetic programming-based feature extraction, in: Proceedings of the 10th International Conference on Intelligent Systems Design and Applications (ISDA’10), 2010, pp. 501–506). In the most extreme cases, a single misclassified example of the minority class can create a significant drop in performance.

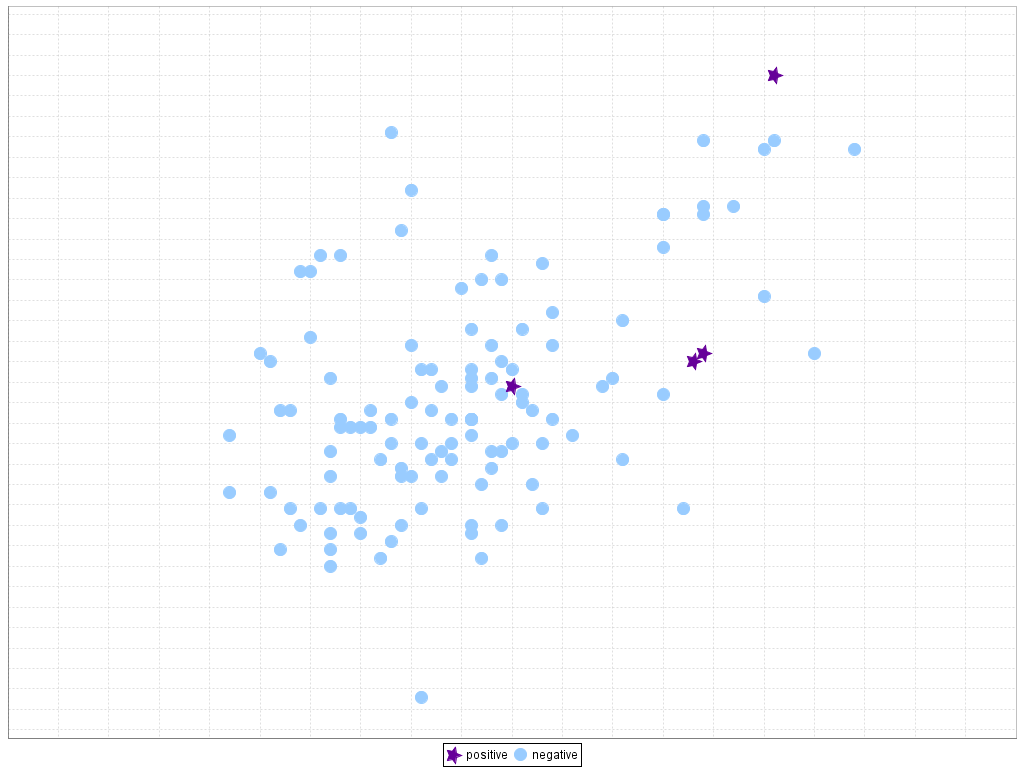

For clarity, Figures 12 and 13 present two examples of the influence of the dataset shift in imbalanced classification. In the first case (Figure 12), it is easy to see a separation between classes in the training set that carries over perfectly to the test set. However, in the second case (Figure 13), it must be noted how some minority class examples in the test set are at the bottom and rightmost areas while they are localized in other areas in the training set, leading to a gap between the training and testing performance. These problems are represented in a two-dimensional space by means of a linear transformation of the inputs variables, following the technique given in J.G. Moreno-Torres, F. Herrera, A preliminary study on overlapping and data fracture in imbalanced domains by means of genetic programming-based feature extraction, in: Proceedings of the 10th International Conference on Intelligent Systems Design and Applications (ISDA’10), 2010, pp. 501–506.

Figure 12. Example of good behavior (no dataset shift) in imbalanced domains: ecoli4 dataset, 5th partition

Figure 13. Example of bad behavior caused by dataset shift in imbalanced domains: ecoli4 dataset, 1st partition

Since the dataset shift is a highly relevant issue in imbalanced classification, it is easy to see why it would be an interesting perspective to focus on in future research regarding this topic. There are two different potential approaches in the study of the dataset shift in imbalanced domains:

- The first one focuses on intrinsic dataset shift, that is, the data of interest includes some degree of shift that is producing a relevant drop in performance. In this case, we may develop techniques to discover and measure the presence of dataset shift (D.A. Cieslak, N.V. Chawla, Analyzing pets on imbalanced datasets when training and testing class distributions differ, in: Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining (PAKDD08). Osaka, Japan, 2008, pp. 519–526, D.A. Cieslak, N.V. Chawla, A framework for monitoring classifiers’ performance: when and why failure occurs?, Knowledge and Information Systems 18 (1) (2009) 83–108. doi: 10.1007/s10115-008-0139-1, Y. Yang, X. Wu, X. Zhu, Conceptual equivalence for contrast mining in classification learning, Data & Knowledge Engineering 67 (3) (2008) 413–429. doi: 10.1016/j.datak.2008.07.001), but adapting them to focus on the minority class. Furthermore, we may design algorithms that are capable of working under dataset shift conditions, either by means of preprocessing techniques (J.G. Moreno-Torres, X. Llorà, D.E. Goldberg, R. Bhargava, Repairing fractures between data using genetic programming-based feature extraction: a case study in cancer diagnosis, Information Sciences 222 (2013) 805–823. doi: 10.1016/j.ins.2010.09.018) or with ad hoc algorithms (R. Alaiz-Rodríguez, A. Guerrero-Curieses, J. Cid-Sueiro, Improving classification under changes in class and within-class distributions, in: Proceedings of the 10th International Work-Conference on Artificial Neural Networks (IWANN ’09), Springer-Verlag, Berlin, Heidelberg, 2009, pp. 122–130, S. Bickel, M. Brückner, T. Scheffer, Discriminative learning under covariate shift, Journal of Machine Learning Research 10 (2009) 2137–2155. doi: 10.1145/1577069.1755858, A. Globerson, C.H. Teo, A. Smola, S. Roweis, An adversarial view of covariate shift and a minimax approach, in: J. Quiñonero Candela, M. Sugiyama, A. Schwaighofer, N.D. Lawrence (Eds.), Dataset Shift in Machine Learning, The MIT Press, 2009, pp. 179–198). In both cases, we are not aware of any proposals in the literature that focus on the problem of imbalanced classification in the presence of dataset shift.

- The second approach in terms of dataset shift in imbalanced classification is related to induced dataset shift. Most current state of the art research is validated through stratified cross-validation techniques, which are another potential source of shift in the learning process. A more suitable validation technique needs to be developed in order to avoid introducing dataset shift issues artificially.

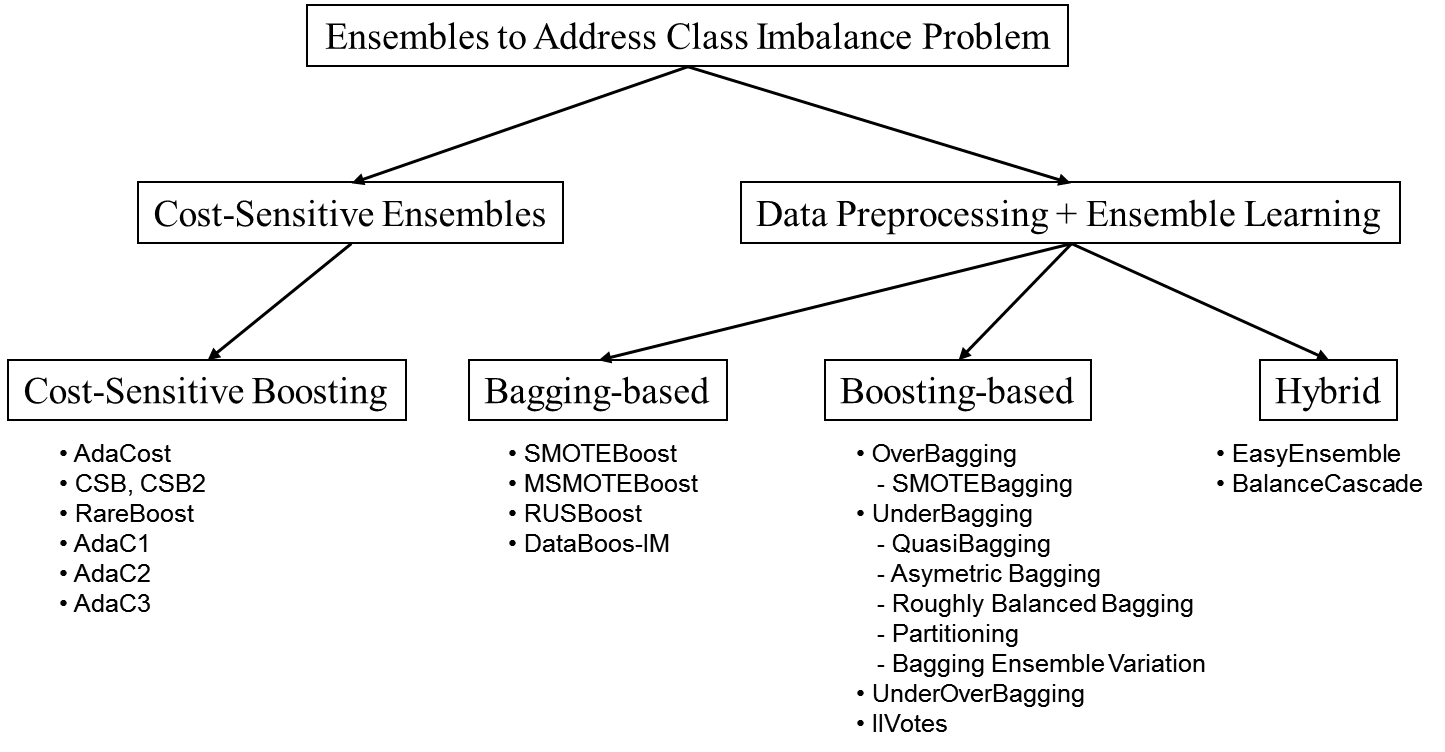

Addressing Classification with imbalanced data: preprocessing, cost-sensitive learning and ensemble techniques