SMOTE-IPF: Addressing the Noisy and Borderline Examples Problem in Imbalanced Classification by a Re-sampling Method with Filtering

This Website contains the complementary material to the paper:

José A. Sáez, Julián Luengo, Jerzy Stefanowski, Francisco Herrera, SMOTE-IPF: Addressing the Noisy and Borderline Examples Problem in Imbalanced Classification by a Re-sampling Method with Filtering. Information Sciences, submitted.

The web is organized according to the following summary:

Abstract

Classification datasets often have an unequal class distribution among their examples. This problem is known as imbalanced classification. The Synthetic Minority Over-sampling Technique (SMOTE) is one of the most well-known methods to cope with it and to balance the different number of examples of each class. However, as recent works claim, class imbalance is not a problem in itself and performance degradation is also associated with other factors related to the distribution of the data. One of them is the presence of noisy and borderline examples, the latter lying in the areas surrounding class boundaries. Intrinsic limitations of SMOTE can aggravate the problem produced by these types of examples and actual generalizations of SMOTE are neither correctly adapted to their treatment.

This paper proposes to extend SMOTE by a new element, an iterative ensemble-based noise filter called Iterative-Partitioning Filter (IPF), which can overcome the problems produced by noisy and borderline examples in imbalanced datasets. This extension results in SMOTE-IPF. The properties of this proposal are discussed in a comprehensive experimental study. It is compared against a basic SMOTE and its most well-known generalizations. The experiments are carried out both on a set of synthetic datasets with different levels of noise and shapes of borderline examples as well as real-world ones. Furthermore, the impact of introducing additional different types and levels of noise into these real-world data is studied. The results show that the new proposal performs better than exiting SMOTE generalizations for all these different scenarios. The analysis of these results also helps to identify the characteristics of IPF which differentiate it from other filtering approaches.

Datasets

Re-sampling methods

- SMOTE in its basic version. The implementation of SMOTE used in this paper considers 5 nearest neighbors, the HVDM metric to compute the distance between examples and balances both classes to 50%.

- SMOTE + Tomek Links. This method uses tomek links (TL) to remove examples after applying SMOTE, which are considered being noisy or lying in the decision border. A tomek link is defined as a pair of examples x and y from different classes, that there exists no example z such that d(x,z) is lower than d(x,y) or d(y,z) is lower than d(x,y), where d is the distance metric.

- SMOTE-ENN. ENN tends to remove more examples than the TL does, so it is expected that it will provide a more in depth data cleaning. ENN is used to remove examples from both classes. Thus, any example that is misclassified by its three nearest neighbors is removed from the training set.

- SL-SMOTE. This method assigns each positive example its so called safe level before generating synthetic examples. The safe level of one example is defined as the number of positive instances among its k nearest neighbors. Each synthetic example is positioned closer to the example with the largest safe level so all synthetic examples are generated only in safe regions.

- Borderline-SMOTE. This method only oversamples or strengthens the borderline minority examples. First, it finds out the borderline minority examples P, defined as the examples of the minority class with more than half, but not all, of their m nearest neighbors belonging to the majority class. Finally, for each of those examples, we calculate its k nearest neighbors from P (for the algorithm version B1-SMOTE) or from all the training data, also with majority examples (for the algorithm version B2-SMOTE) and operate similarly to SMOTE. Then, synthetic examples are generated from them and added to the original training set.

Classifiers and Parameters

- C4.5 is a decision tree generating algorithm. It has been used in many recent analyses of imbalanced data. It induces classification rules in the form of decision trees from a set of given examples. The decision tree is constructed in a top-down way, using the normalized information gain (difference in entropy) that results from choosing an attribute to split the data. The attribute with the highest normalized information gain is the one used to make the decision.

- k-Nearest Neighbors (k-NN) finds a group of k instances in the training set that are closest to the test pattern. The predicted class label is based on the predominance of a particular class in this neighborhood. The distance and the number of neighbors are key elements of this algorithm.

- Support Vector Machine (SVM) maps the original input space into a high-dimensional feature space via a certain kernel function (avoiding the computation of the inner product of two vectors). In the new feature space, the optimal separating hyperplane with maximal margin is determined in order to minimize an upper bound of the expected risk instead of the empirical risk. We use SMO training algorithm to obtain the SVM classifiers.

- Repeated Incremental Pruning to Produce Error Reduction (RIPPER) builds a decision list of rules to predict the corresponding class for each instance. The list of rules is grown one by one and immediately pruned. Once a decision list for a given class is completely learned, an optimization stage is performed.

- PART is based on C4.5 algorithm. In each iteration, a partial C45 tree is generated and its best rule extracted (the one wich cover more examples). The algorithm ends when all the examples are covered. A partial tree is one whose braches are not fully explored. When a node has grown all its children, the node is eligible to prune. If the node is not pruned, all the exploration ends.

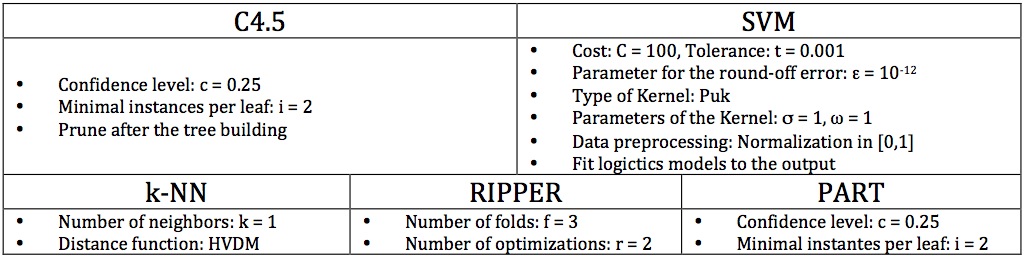

The classification algorithms have been executed using the parameters shown in the following table:

The implementation can be found in the KEEL Software Tool

Results

Performance Results

| Datasets | C4.5 | k-NN | SVM | RIPPER | PART |

|---|---|---|---|---|---|

| Synthetic | |||||

| Real-world | |||||

| Class Noise - 20% | |||||

| Class Noise - 40% | |||||

| Attribute Noise - 20% | |||||

| Attribute Noise - 40% |